When you’ve been hit by Panda, it’s extremely important to quickly identify the root causes of the attack. I typically jump into a deep crawl analysis of the site while performing an extensive audit through the lens of Panda. The result is a remediation plan covering a number of core website problems that need to be rectified sooner rather than later.

And for larger-scale websites, the remediation plan can be long and complex. It’s one of the reasons I tend to break up the results into smaller pieces as the analysis goes on. I don’t want to dump 25 pages of changes into the lap of a business owner or marketing team all at one time. That can take the wind out of their sails in a hurry.

But just because problems have been identified, and a remediation plan mapped out, it does not mean all is good in Panda-land. There may be times when serious problems cannot be easily resolved. And if you can’t tackle low-quality content on a large-scale site hit by Panda, you might want to get used to demoted rankings and low traffic levels.

When Your CMS Is the Problem

One problem in particular that I’ve come across when dealing with Panda remediation is the dreaded content management system (CMS) obstacle. And I’m using “CMS” loosely here, since some internal systems are not actually content management systems. They simply provide a rudimentary mechanism for getting information onto a website. There’s a difference between that and a full-blown CMS. Regardless, the CMS being used can make Panda changes easy, or it can make them very hard. Each situation is different, but it’s something I’ve come across a number of times while helping clients.

When presenting the remediation plan to a client’s team, there are usually people representing various aspects of the business in the meeting. There might be people from marketing, sales, development, IT and engineering, and even C-level executives on the call. And that’s awesome. In my opinion, everyone needs to be on the same page when dealing with an issue as large as Panda.

But at times, IT and engineering have the task of bringing a sense of reality to how effectively changes can be implemented. And I don’t envy them for being in that position. There’s a lot of traffic and revenue on the line, and nobody wants to be the person who says, “we can’t do that.”

For example, imagine you surfaced 300,000 pages of thin content after getting pummeled by Panda. The pages have been identified, including the directories that have been impacted, but the custom CMS will not enable you to easily handle that content. When the CMS limitations are explained, the room goes silent.

That’s just one real-world example I’ve come across while helping companies with Panda attacks. It’s not a comfortable situation, but it absolutely needs to be addressed.

Trapped With Panda Problems

So what types of CMS obstacles could you run into when trying to recover from Panda? Unfortunately, there are many, and some may be specific to your own custom CMS. Below, I’ll cover five problems I’ve seen first-hand while helping clients with Panda remediation. Note that I can’t cover every potential CMS problem that can inhibit Panda recovery, so I’ve focused on five core issues. Then I’ll cover some tips for overcoming those obstacles.

1. 404s and 410s

When you hunt down low-quality content and have a giant list of URLs to nuke (remove from the site), you want those URLs to return either 404 or 410 header response codes. So you approach your dev team and explain the situation. Unfortunately, with some content management systems it’s not easy to isolate specific URLs for removal. And if you cannot remove those specific low-quality URLs, you may never escape the Panda filter. It’s a Catch-22 with a Panda rub.

In my experience, I’ve seen CMS packages that could 404 pages, but only by major category. So you would be throwing the baby out with the bathwater. When you nuke a category, you nuke high-quality content along with low-quality content. That’s not good, and it defeats the purpose of your Panda remediation.

I’ve also seen CMS platforms that could only remove select content from a specific date forward or backward. And that’s not good either. Again, you would be nuking good content along with low-quality content, all based on date. The goal is to boost the percentage of high-quality content on your site, not to obliterate large sections of content that can include both high- and low-quality URLs.
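What you want from the CMS is a per-URL decision rather than a per-category or per-date one. Here is a minimal Python sketch of that idea, assuming a hypothetical `LOW_QUALITY_PATHS` set exported from the crawl analysis; the `status_for` helper is illustrative and not part of any particular CMS.

```python
from http import HTTPStatus

# Hypothetical export of individual URLs flagged as thin or low quality
# during the crawl analysis (paths only, no trailing slash).
LOW_QUALITY_PATHS = {
    "/reviews/widget-123",
    "/reviews/widget-456",
}


def status_for(path: str) -> HTTPStatus:
    """Pick the response code for one URL.

    The decision is made per URL, so other pages in the same category
    or date range keep returning 200.
    """
    if path.rstrip("/") in LOW_QUALITY_PATHS:
        return HTTPStatus.GONE  # 410; HTTPStatus.NOT_FOUND (404) also works
    return HTTPStatus.OK


for p in ["/reviews/widget-123", "/reviews/widget-123/", "/reviews/widget-789"]:
    print(p, int(status_for(p)))
```

The first two paths print 410 and the third prints 200, even though all three live in the same `/reviews/` directory.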

2. Meta Robots Tag

Similar to what I listed above, if you need to noindex content (versus remove), then your CMS must enable you to dynamically provide the meta robots tag. For example, if you find 50,000 pages of content on the site that is valuable for users to access, but you don’t want the content indexed by Google, you could provide the meta robots tag on each page using “noindex, follow.” The pages won’t be indexed, but the links on the page would be followed. Or you could use “noindex, nofollow” where the pages wouldn’t be indexed and the links wouldn’t be followed. It depends on your specific situation.

But once again, the CMS could create obstacles to getting this implemented. I’ve seen situations where, once a meta robots tag is in a page’s code, it’s impossible to change. I’ve also seen multiple meta robots tags used on the same page in an effort to noindex low-quality content.

And beyond that, there have been times where the meta robots tag isn’t even an option in the CMS. That’s right, you can’t issue the tag even if you wanted to. Or, similar to what I explained earlier, you can’t selectively use the tag. It’s a category or class-level directive that would force you to noindex high-quality content along with low-quality content. And we’ve already covered why that’s not good.

The meta robots tag can be a powerful piece of code in SEO, but you need to be able to use it correctly and selectively. If not, it can have serious ramifications.
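To make “selectively” concrete, here is a minimal Python sketch of the per-URL decision a CMS needs to support. It assumes a hypothetical audit export listing which paths get “noindex, follow” and which get “noindex, nofollow”; every other page gets no tag at all. Returning at most one tag per page also avoids the multiple-tag situation described above.

```python
# Hypothetical per-URL directives, e.g. exported from the Panda audit.
NOINDEX_FOLLOW = {"/archive/page-2", "/tags/blue-widgets"}
NOINDEX_NOFOLLOW = {"/internal-search/widgets"}


def meta_robots_tag(path: str) -> str:
    """Return at most one meta robots tag for a URL.

    An empty string means no tag, which search engines treat as the
    default (index, follow).
    """
    if path in NOINDEX_NOFOLLOW:
        content = "noindex, nofollow"
    elif path in NOINDEX_FOLLOW:
        content = "noindex, follow"
    else:
        return ""
    return f'<meta name="robots" content="{content}">'


print(meta_robots_tag("/archive/page-2"))
print(repr(meta_robots_tag("/guides/choosing-a-widget")))
```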

3. Nofollow

Proper use of nofollow matters across algorithms, and I’m including it in this post because I’ve encountered nofollow problems during Panda projects. It can help with link penalties and Penguin situations too. And let’s hope your CMS cooperates when you need it to.

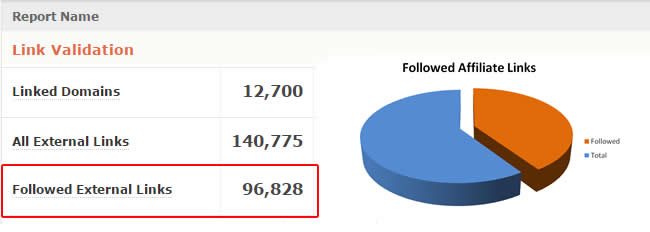

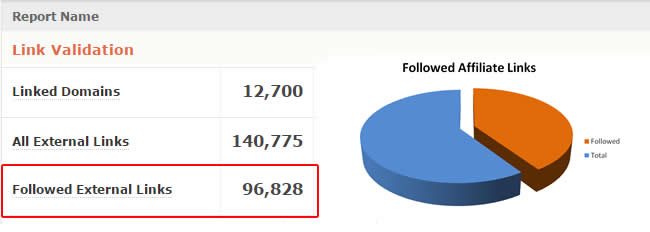

For example, I’ve helped some large affiliate websites that had a massive followed links problem. Affiliate links should be nofollowed and should not flow PageRank to destination websites (where there is a business relationship). But what if you have a situation where all, or most of, your affiliate links were followed? Let’s say your site has 2 million pages indexed and contains many followed affiliate links to e-commerce websites. The best way to handle this situation is to simply nofollow all affiliate links throughout the content, while leaving any natural links intact (followed). That should be easy, right? Not so fast…

What seems like a quick fix via a content management system could turn out to be a real headache. Some custom CMS platforms can only nofollow all links on the page, and that’s definitely not what you want to do. You only want to selectively nofollow affiliate links.

In other situations, I’ve seen CMS packages that could only nofollow links from a certain date forward, since upgrades to the CMS finally enabled selective nofollows. But what about the 400,000 pages that were indexed before that date? You don’t want to leave those as-is if they contain followed affiliate links. Again, a straightforward fix suddenly becomes a challenge for business owners dealing with Panda.
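Here is a minimal Python sketch of selective nofollow applied when a link is rendered. The affiliate host list (`AFFILIATE_HOSTS`) and the `render_link` helper are hypothetical; a real CMS would hook something like this into its own link-rendering or output-filtering step. Only links whose host matches a known affiliate domain get `rel="nofollow"`, while natural links stay followed.

```python
from html import escape
from urllib.parse import urlparse

# Hypothetical affiliate destinations; in practice this list would come
# from your affiliate agreements or a crawl of outbound links.
AFFILIATE_HOSTS = {"shop.example.com", "deals.example.net"}


def is_affiliate(href: str) -> bool:
    """True if the link points at an affiliate host or one of its subdomains."""
    host = urlparse(href).hostname or ""  # hostname is already lowercased
    return any(host == h or host.endswith("." + h) for h in AFFILIATE_HOSTS)


def render_link(href: str, text: str) -> str:
    """Render an anchor, adding rel="nofollow" only for affiliate links."""
    rel = ' rel="nofollow"' if is_affiliate(href) else ""
    return f'<a href="{escape(href)}"{rel}>{escape(text)}</a>'


print(render_link("https://shop.example.com/p?id=1", "Buy the widget"))
print(render_link("https://en.wikipedia.org/wiki/Giant_panda", "Giant panda"))
```

Because the decision happens when a page is rendered rather than being stored with each article, the same rule would cover older pages as well as new ones, assuming your CMS renders links through a shared template or filter. That is one way around the date-cutoff problem.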

4. Rel Canonical

There are times when a URL gets replicated on a large-scale website (for multiple reasons). So that one URL turns into four or five URLs (or more). And on a site that houses millions of pages of content, the problem could quickly get out of control. You could end up with tens of thousands, hundreds of thousands, or even millions of duplicate URLs.

You would obviously want to fix the root problem of producing non-canonical versions of URLs, but I won’t go down that path for now. Let’s just say you wanted to use the canonical URL tag on each duplicate URL pointing to the canonical URL. That should be easy, right? Again, not always…

I’ve seen some older CMS packages that don’t support rel canonical at all. Then there are situations similar to the ones I explained above with 404s and noindex: the CMS is incapable of selectively issuing the canonical URL tag. It can produce a self-referencing href (pointing to the page itself), but it can’t be customized. So all of the duplicate URLs might include the canonical URL tag, but each one points to itself. That actually reinforces the duplicate content problem instead of consolidating indexing properties to the canonical URL.
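As a minimal sketch of what “selectively issuing” the tag means, the Python below computes the canonical URL for a duplicate by dropping query parameters that create duplicates, then points the tag at that URL instead of at the page itself. The parameter names in `DUPLICATE_PARAMS` are assumptions for illustration; which parameters cause duplication depends entirely on your site.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that generate duplicate versions of a URL on this site.
DUPLICATE_PARAMS = {"sessionid", "sort", "utm_source", "utm_medium", "utm_campaign"}


def canonical_url(url: str) -> str:
    """Strip duplicate-generating parameters (and any fragment), keeping the rest."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in DUPLICATE_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_tag(url: str) -> str:
    """Build the canonical link element for any URL, duplicate or not."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'


print(canonical_tag("https://www.example.com/widgets/blue?sort=price&sessionid=abc123"))
# <link rel="canonical" href="https://www.example.com/widgets/blue">
```

The canonical version itself still gets a self-referencing tag, which is fine; the difference is that every duplicate now points at it rather than at itself.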

By the way, I wrote a post in December covering a number of dangerous rel canonical problems that can cause serious issues. I recommend reading that post if you aren’t familiar with how rel canonical can impact your SEO efforts.

5. Robots.txt

And last, but not least, I’ve seen situations where a robots.txt file had more security and limitations around it than the President of the United States. For certain CMS packages or custom CMS platforms, there are times the robots.txt file can only contain certain directives, while only being implemented via the CMS itself (with no customization possible).

For example, maybe you can only disallow major directories on the site, but not specific files. Or maybe you can disallow certain files, but you can’t use wildcards. By the way, the limitations might have been put in place by the CMS developers with good intentions. They understood the power of robots.txt, but didn’t leave enough room for scalability. And they definitely didn’t have Panda in mind, especially since some of the content management systems I’ve come across were developed before Panda hit the scene!
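If your CMS lets you edit robots.txt at all, it’s worth testing that the rules do what you intend before they go live. Below is a minimal sketch using Python’s standard-library `urllib.robotparser`, with hypothetical paths: one rule blocks an entire directory and another blocks a single file. One caveat: as far as I know, `urllib.robotparser` does plain prefix matching and does not interpret Google’s `*` and `$` wildcards, so wildcard rules need a tester that supports them.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block a whole thin-content directory plus one specific file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /thin-directory/
Disallow: /reports/old-report.html
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in [
    "https://www.example.com/thin-directory/page-1",
    "https://www.example.com/reports/old-report.html",
    "https://www.example.com/reports/new-report.html",
]:
    # can_fetch() reports whether the given user agent may crawl the URL.
    print(url, parser.can_fetch("Googlebot", url))
```

The first two URLs print `False` and the third prints `True`.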

In other robots.txt situations, I’ve seen custom changes get wiped out nightly (or randomly) as the CMS pushes out the latest robots.txt file automatically. Talk about frustrating. Imagine customizing a robots.txt file only to see it revert at midnight. It’s like a warped version of Cinderella, only this time it’s a bamboo slipper and the prince of darkness. Needless to say, it’s important to have control of your robots.txt file. It’s an essential mechanism for controlling how search bots crawl your website.
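One way to catch silent reverts is a scheduled check that compares the live file against the version you expect. This is a minimal Python sketch under stated assumptions: `EXPECTED` stands in for your approved robots.txt, the site URL is a placeholder, and how you alert (email, chat, ticket) is left out. It could run from cron shortly after the time the CMS usually pushes its file.

```python
import urllib.request

# Hypothetical approved version of the file, e.g. kept in version control.
EXPECTED = """\
User-agent: *
Disallow: /thin-directory/
"""


def normalize(text: str) -> list[str]:
    """Ignore line-ending style, trailing whitespace, and surrounding blank lines."""
    return [line.rstrip() for line in text.strip().splitlines()]


def robots_changed(live: str, expected: str) -> bool:
    """True if the live file no longer matches the approved version."""
    return normalize(live) != normalize(expected)


def fetch_robots(site: str, timeout: float = 10.0) -> str:
    """Download robots.txt from a site root such as 'https://www.example.com'."""
    url = site.rstrip("/") + "/robots.txt"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

For example, `robots_changed(fetch_robots("https://www.example.com"), EXPECTED)` returns `True` when the CMS has overwritten your customizations.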

What Could You Do?

When you run into CMS limitations while working on Panda remediation, you have several options for moving forward. The path you choose to travel completely depends on your own situation, the organization you work for, budget limitations, and resources. Below, I’ll cover a few ways you can move forward, based on helping a number of clients with similar situations.

1. Modify the CMS

This is the most obvious choice when you run into CMS functionality problems. And if you have the development chops in-house, then this can be a viable way to go. You can identify all of the issues SEO-wise that the CMS is producing, map out a plan of attack, and develop what you need. Then you can thoroughly test in a staging environment and roll out the new and improved CMS over time.

By tackling the root problems (the CMS functionality itself), you can be sure that the site will be in much better shape SEO-wise, not only in the short-term, but over the long-term as well. And if developed with the future in mind, then the CMS will be open to additional modifications as more technical changes are needed.

The downside is you’ll need seasoned developers, a budget, and the time to work on the modifications, test them, debug problems, etc. Some organizations are large enough to take on the challenge and the cost, while smaller companies may not be. In my experience, this has been a strong path to take when dealing with CMS limitations SEO-wise.

2. Migrate to a New CMS

I can hear you groaning about this one already. 🙂 In serious situations, where the CMS is so bad and so limiting, some companies choose to move to an entirely new CMS. Remember, Panda hits can sometimes suck the life out of a website, so grave SEO situations sometimes call for hard decisions. If the benefits of migrating to a new CMS far outweigh the potential pitfalls of the migration SEO-wise, then this could be a viable way to go for some companies.

But make no bones about it, you will now be dealing with a full-blown CMS migration. And that brings a number of serious risks with it. For example, you’ll need to do a killer job of migrating the URLs, which includes a solid redirection plan. You’ll need to ensure valuable inbound links don’t get dropped along the way. You’ll need to make sure the user experience doesn’t suffer (across devices). And you’ll have a host of other concerns and mini-projects that come along with a redesign or CMS migration.
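Part of a solid redirection plan is making sure every old URL maps, in one hop, to its final destination, and that URLs with valuable inbound links aren’t left out. The Python below is a minimal sketch assuming a hypothetical redirect map (old path to new path) and a hypothetical list of linked URLs exported from a backlink tool. It collapses redirect chains, flags loops, and lists linked URLs that have no redirect.

```python
def resolve_redirects(redirects: dict[str, str], max_hops: int = 10) -> dict[str, str]:
    """Collapse chains so every old URL redirects straight to its final destination."""
    final = {}
    for start in redirects:
        seen = {start}
        target = redirects[start]
        hops = 1
        while target in redirects:  # the target is itself being redirected
            if target in seen or hops >= max_hops:
                raise ValueError(f"redirect loop or overly long chain starting at {start}")
            seen.add(target)
            target = redirects[target]
            hops += 1
        final[start] = target
    return final


def missing_redirects(linked_urls: set[str], redirects: dict[str, str]) -> list[str]:
    """Old URLs with inbound links that the migration plan does not redirect."""
    return sorted(url for url in linked_urls if url not in redirects)


# Hypothetical data for illustration.
plan = {"/old-a": "/old-b", "/old-b": "/new-b", "/old-c": "/new-c"}
print(resolve_redirects(plan))
print(missing_redirects({"/old-a", "/old-d"}, plan))
```

Here `/old-a` would be redirected straight to `/new-b` instead of hopping through `/old-b`, and `/old-d` is flagged because it has inbound links but no redirect.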

For larger-scale sites, this is no easy feat. Actually, redesigns and CMS migrations are two of the top reasons I get calls about significant drops in organic search traffic. Just understand this before you pull the trigger on migrating to a new CMS. It’s not for the faint of heart.

3. Project Frankenstein – Tackle What You Can, and When You Can

Panda is algorithmic, and algorithms are all about percentages. In a perfect world, you would tackle every possible Panda problem riddling your website. But in reality, some companies cannot do this. Still, you might be able to recover from Panda without tackling every single problem. Don’t get me wrong, I’ve written before about band-aids not being a long-term solution for Panda recovery, but if you can tackle a good percentage of problems, then you might rid yourself of the Panda filter.

Let me emphasize that this is not the optimal path to take, but if you can’t take any other path, then do your best with Project Frankenstein.

For example, if you can’t make significant changes to your CMS (development-wise), and you can’t migrate to a new CMS, then maybe you can still knock out some tasks in the remediation plan that remove a good amount of thin and low-quality content. I’ve had a number of clients in this situation over the years, and this approach has worked for some of them.

As a quick example, one client focused on four big wins based on the remediation plan I mapped out. They were able to nuke 515,000 pages of thin and low-quality content from the site based on just one find from the crawl analysis and audit. Now, it’s a large site, but that’s still a huge find Panda-wise. And when you added the other three items they could tackle from the remediation plan, the total amount of low-quality content removed from the site topped 600,000 pages.

So although Frankenstein projects aren’t sexy or ultra-organized, they still have a chance of working from a Panda remediation standpoint. Just look for big wins that can be forced through. And try to knock out large chunks of low-quality content while publishing high-quality content on a regular basis. Again, it’s about percentages.

Summary – Don’t Let Your CMS Inhibit Panda Recovery

Panda remediation is tough, but it can be exponentially tougher when your content management system (CMS) gets in the way. When you’ve been hit by Panda, you need to work hard to improve content quality on your website (which means removing low-quality content while also creating or boosting high-quality content). Don’t let your CMS inhibit a Panda recovery by placing obstacles in your way. Instead, understand the core limitations, meet with your dev and engineering teams to work through the problems, and figure out the best way to overcome those obstacles. That’s a strong, long-term approach to ridding your site of Panda.