Hi there. My name is Dave and over the next few months I shall be bringing my own geeky style of SEO to you, the fine readership of Search Engine Watch. For those of you not familiar with this wandering web Gypsy, my thing is looking at SEO from an IR perspective.

To get things rolling I thought I’d get into one of the more important areas affecting SEOs in the modern age: personalization.

Search Personalization 2011

We can break that down into three core areas:

- Traditional personalization (search/surfing history)

- Geo-localized

- Social

To get a sense of the range, here is a list of some forms of personalization:

- Geographic

  - Localized TLD

  - IP address

  - Query analysis

- Technical

  - Browser

  - OS capabilities

  - Cookies?

  - Toolbar?

- Time based

  - Time of day

  - Time of year

  - Historical data

- Behavioral

  - Query history

  - SERP interaction

  - Selection and bounce rates

  - Interactions with advertising

  - Surfing habits (frequent surfer – fresher results)

  - Bookmarks (and sharing)

  - Reader (and sharing)

  - Voting/SERP manipulation/sharing tools

While Bing has been touting personalization more over the last while, we’ll look at Google here today as they’ve long been active in this area.

Traditional Search Personalization

First off, I want to get into the personalized search aspects. What most people call “behavioral data” is known in IR terms as implicit and explicit user feedback. That’s important to know because I tend to throw those terms around a bunch, and it’s always handy to speak in IR terms… or at least it is when I’m around (rofl). Explicit signals are when a user takes a deliberate action. Some of those include:

- Adding to favorites

- Voting

- Printing of page

- Emailing a page to a friend (from site)

These are considered the holy grail of user feedback, but they are often very hard to get because people generally aren’t interested in taking active involvement with the search engine. Searchers just want to find what they’re after and get on with it (y’all likely remember the infamous and now defunct SearchWiki, where you’d vote a listing in the SERP up or down… as with most such attempts by Google, people simply weren’t using it enough to yield actionable data).

Given that this type of data isn’t readily available, IR folks also have to look at implicit user feedback. This type of data is more about inferring valuations from people’s ordinary actions rather than from deliberate ones. Some of these can include:

- Query history (search history)

- SERP interaction (revisions, selections and bounce rates)

- User document behavior (time on page/site, scrolling behavior)

- Surfing habits (frequency and time of day)

- Interactions with advertising

- Demographic and geographic

- Data from different applications (application focus: IM, email, reader)

- Closing a window

While these might seem logical at first glance, over the years IR folks have been divided as to what the actual value of these may be, because of what is often called ‘noise’ (as in signal-to-noise ratio). Some of the problems with these traditionally include:

- You save the link for later and continue to search

- You found what you needed on the page and went looking for more information

- You walk away from your browser and leave the window on a page for an hour

- Multiple users in your home during a given session

- You open a listing in a new window (when further tracking is unavailable)

- You found the information in a SERP snippet and selected nothing

- You were unsatisfied with the page selected and dug three pages deeper (unsatisfied, not engaged)

- Queries from automated tools (like a rank checker), which adds noise to overall data

- SERP bias — do people simply click the top x results regardless of relevance?

- Different users having different understanding of the relevance of a document (result)
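To make the SERP bias problem a little more concrete, here is a minimal Python sketch of one way IR folks try to correct for it: comparing the clicks a result actually received with the clicks you would expect from its position alone (often called "clicks over expected clicks"). The baseline click-through rates and the function name below are made-up assumptions for illustration, and nothing here is meant to suggest that this is how Google handles it.

```python
def clicks_over_expected_clicks(impressions, baseline_ctr):
    """Ratio of observed clicks to the clicks expected from position alone.

    impressions: list of (position, clicked) tuples for a single result.
    baseline_ctr: dict mapping SERP position to an average click-through rate.
    """
    clicks = sum(1 for _, clicked in impressions if clicked)
    expected = sum(baseline_ctr[position] for position, _ in impressions)
    return clicks / expected if expected else 0.0


# Hypothetical baseline click-through rates by position (invented numbers).
BASELINE_CTR = {1: 0.30, 2: 0.15, 3: 0.10}

# Both results were clicked once in four impressions, so raw CTR is identical.
top_result = [(1, True), (1, False), (1, False), (1, False)]
third_result = [(3, True), (3, False), (3, False), (3, False)]

top_score = clicks_over_expected_clicks(top_result, BASELINE_CTR)
third_score = clicks_over_expected_clicks(third_result, BASELINE_CTR)
```

With these invented numbers the third-position result scores higher than the top one, because it earned the same clicks from a spot where fewer clicks are expected. A score above 1 suggests a listing is outperforming its position; a score below 1 suggests it is mostly riding on placement.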

Another problem with these can be the processing required to actually implement them. For Google, the search quality team has to balance performance (and speed of delivery) with the actual improvements in search quality (not to mention the bottom-line cost of the processing).

Since Caffeine in 2010, we can surmise that they may be getting closer to a point where they can use these signals more (and police potential abuse). Google has said that this kind of data is ‘spammy and noisy’, so it’s hard to say to what degree they use these signals today. For more on some of the issues with bounce rates and implicit feedback, there are a ton of papers at the end of this post.

Another interesting part of personalization in search, for Google at least, is what is known as ‘Personalized PageRank’. It dates from 2003 and, among other things, is generally credited with the rise of anchor text valuations. It is also known in IR terms as ‘user sensitive PageRank’.

Keep in mind Bing (and once upon a time Yahoo) use PageRank; it’s not just a Google thang anyway. Back in 2003 there was this company called Kaltix, run by a guy named Sep Kamvar, who even sat in Larry Page’s cubicle at Stanford.

In the press release at the time, Google said of Sep:

“(He’s been) working on a number of compelling search technologies, and Google is the ideal vehicle for the continued development of these advancements” (Google press release)

Of particular interest was that they were ‘developing personalized and context-sensitive search technologies’:

“Kaltix Corp. was formed in June 2003 and focuses on developing personalized and context-sensitive search technologies that make it faster and easier for people to find information on the web.”

It was essentially a way of speeding up PageRank calculations by segmenting user types. Of note:

- Topic Sensitive PageRank: While not a direct personalization, it can be used to adapt rankings based on query topics and context. It would be calculated ahead of time and adapted at the time of a search using context elements of a given query.

- Modular PageRank: This aspect essentially restricts the random walk to more authoritative/trusted documents. So in a roundabout way, not so random a journey ultimately.

- BlockRank: These calculations are done on segments such as host domains, using probabilistic calculations to limit the scope and provide a more granular level. For more on that, see Exploiting the Block Structure of the Web for Computing PageRank (PDF).
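For the geeks in the room, the basic idea behind personalized (and topic sensitive) PageRank can be sketched in a few lines of Python. Classic PageRank lets the random surfer jump to any page with equal probability; a personalized variant biases that jump towards pages a given user or topic cares about. The tiny four-page web, the page names, and the function name below are all hypothetical, and this is a textbook-style power iteration, not the approach Kaltix or Google actually shipped.

```python
def personalized_pagerank(links, teleport, damping=0.85, iterations=50):
    """Power iteration for PageRank with a non-uniform teleport vector.

    links: dict mapping each page to the list of pages it links to.
    teleport: dict mapping pages to jump probabilities (should sum to 1).
    """
    pages = list(links)
    rank = {p: 1.0 / len(pages) for p in pages}
    for _ in range(iterations):
        # The random jump lands on pages in proportion to the teleport vector.
        new_rank = {p: (1.0 - damping) * teleport.get(p, 0.0) for p in pages}
        for page, outlinks in links.items():
            if outlinks:
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # Dangling page: hand its rank back via the teleport vector.
                for p in pages:
                    new_rank[p] += damping * rank[page] * teleport.get(p, 0.0)
        rank = new_rank
    return rank


# Hypothetical four-page web; every link target is also a key.
links = {
    "news": ["sports", "cooking"],
    "sports": ["news"],
    "cooking": ["news", "recipes"],
    "recipes": ["cooking"],
}

uniform = {page: 0.25 for page in links}      # classic, unpersonalized jump
foodie = {"cooking": 0.5, "recipes": 0.5}     # jump biased towards food pages

global_rank = personalized_pagerank(links, uniform)
foodie_rank = personalized_pagerank(links, foodie)
```

The link graph is identical in both runs; only the jump preferences differ, and that alone is enough to lift the food pages for the foodie profile. The catch, as the Stanford folks point out, is that running this per user is far too expensive at web scale, which is where precomputed topic vectors and block-level shortcuts come in.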

When ol’ Sep was at Stanford, he worked on the PageRank project, and one of the bits from there reads:

“Ideally, each user should be able to define his own notion of importance for each individual query. While in principle a personalized version of the PageRank algorithm can achieve this task, its naive implementation requires computing resources far beyond the realm of feasibility. In the past couple of years, we have developed algorithms and techniques towards the goal of scalable, online personalized web search. Our focus is on the efficient computation of personalized variants of PageRank.” (from the Stanford PageRank Project)

Once again, one of the problems with early personalization was resources. Things have changed a lot since then, though, with infrastructure updates such as Big Daddy and Caffeine.

So, one has to ask, what can be done (ROI considered) to affect such types of signals? Given what we know, some considerations can include:

- Demographics

- Relevance Profile

- Keyword Targeting/Phrase Strategies

- Quality Content

- Search Result Conversion

- Freshness

- Print Page/bookmark me/social

- Site Usability

- Analytics

Really, unless you’re a brain dead spamming SEO, you should be chasing most of those anyway. Engagement is the name of the game (SERP out). And that’s the good news. If you’re creating engaging sites/content then by and large you should already be in a position to take advantage of a lot of the factors that come into play with traditional (behavioral) personalization.

Geo-localized Personalization

While the above is the more traditional approach to personalization, we can’t forget some of the others including localized results. As most of you know this is certainly something that has become more and more prevalent in the world of search marketing over the last few years.

When search engines look towards targeting for localization, some of the elements include:

Start up:

- Identify primary target market (country, region)

- Establish secondary targets (neighbor countries, regions, secondary markets)

- Get to know the demographic (searching habits, intent)

Language:

- Primary language – what is the main language in the region? (chart)

- Secondary languages – what other acceptable languages are known to the region? (chart)

- Language nuances – are there dialects? Are there any spelling/grammatical subtleties?

Website/page:

- Is the domain/page URL targeted?

- Registrar data – is the business location information targeted?

- IP of web server – is it hosted locally?

- On page triggers (language, keywords, meta data, images, etc.) – are they present?

- Other web pages – is there location information on the site (e.g. contact pages)?

Links:

- Location information of links – are there a high percentage of regional links pointing to the page?

- The text near the hyperlinks – are the inbound links on relevant pages?

- Links pointing out – are there regional links pointing out from the page?

So, when looking at targeting for local, be sure to use that as a bit of a check list. While I am often leery of group ranking factor studies, here’s David Mihm’s for those interested.

Now, once again, I am not going to get into geolocalized SEO today; that’s likely an entire session on its own. The main point is that it is certainly a form of personalization in modern search and worth looking at.

Social Personalization

This is obviously another form of personalization that is on the rise. If you haven’t looked at Google’s Social Graph API, it’s always a fun one:

“The Social Graph API makes information about the public connections between people on the Web easily available and useful for developers. Developers can query this public information to offer their users dramatically streamlined ‘add friends’ functionality and other useful features.”

There are also a few example apps there including these:

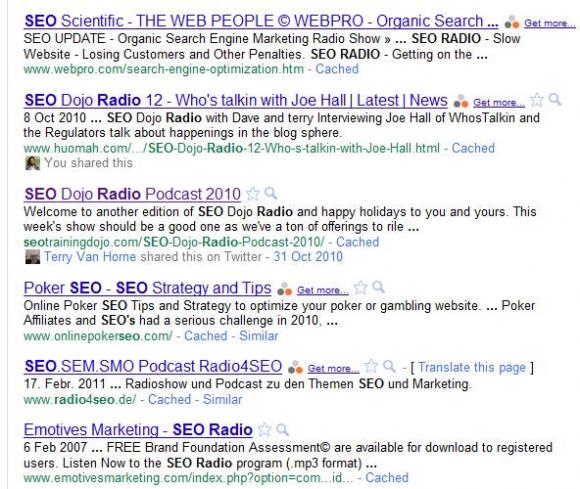

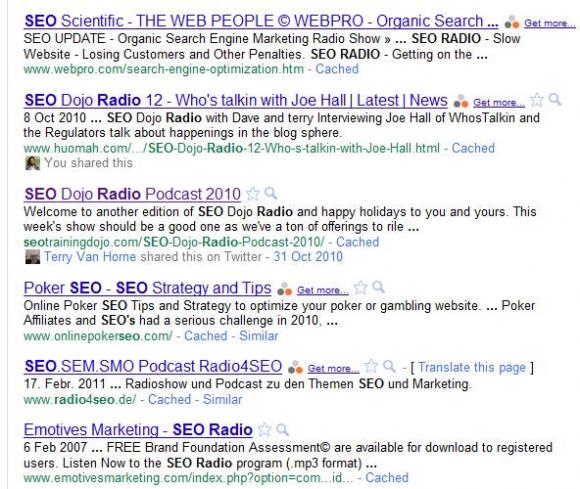

The social annotations themselves started with the update in February of this year, often missed because of the Panda maulings that started some three weeks later. The interesting part about social annotations is that, unlike +1 (as far as we know), they actually re-rank the SERP (for logged-in users).

Logged out:

Logged in:

We’re not sure how big a part social annotations play today as a ranking factor, but we can consider them part of the SEO landscape and, for today’s purposes, establish them as a form of personalization. In fact, much like the local universal in the SERPs, the social annotations can actually act as a driver of click-through rates due to their visual element and social draw.

Understanding Personalization in Modern SEO

And so there we have it… my first offering here on SEW. The goal today wasn’t so much to teach revolutionary SEO tactics as to get a deeper understanding of search, information retrieval, and the SEO landscape.

SEO ain’t dead. SEO ain’t dying. Why? Because search isn’t. As the engines evolve we need to find ways to evolve with them. This is what I like to call “future proofing.” If you know your history and have an intimate understanding of the present, you will most certainly be positioned well for the future.

One doesn’t have to look far (*cough*Panda*cough*) to see what happens when you do the bare minimum and aren’t prepared for the future. I look forward to getting geeky here with you again real soon.

‘Til then… play safe.