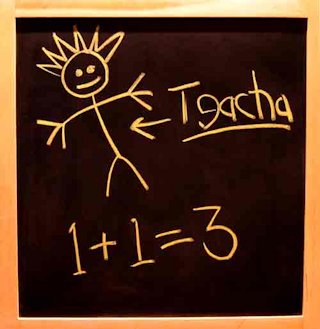

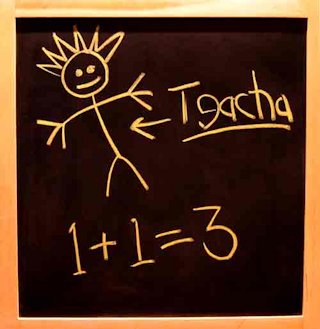

We’ve all heard the terms “machine learning” and “learning algorithms”, but how much do you really understand about them? Do you know what they’re capable of learning and how they go about it? If not, it’s worth finding out, especially if you’re an SEO or content writer, because you’re the one who’ll be doing a lot of the teaching.

First, it’s important to remember that an algorithm is simply a mathematical process. Don’t expect to see true artificial intelligence emerge anytime soon. However, a mathematical algorithm can detect patterns and those patterns can be analyzed for probabilities. That, you’ve been seeing online for some time. Think patterns.

Types of Machine Learning Processes

Depending on what the programmer is focusing on, different approaches can be taken. Even within search, the focus can vary.

The most common approaches are statistical reasoning and inductive reasoning. (Before anyone goes ballistic, I realize that “reasoning” is considered inaccurate for machines. But in this context, it’s the common terminology.)

Statistical Reasoning

Statistical reasoning simply gathers data and analyzes the probabilities of future occurrences following prior observed results.

For example, if an algorithm observes that 80 percent of the ravens it has seen are black, it will estimate that roughly 80 percent of the ravens it sees in the future will also be black.
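To make the raven example concrete, here is a minimal sketch in Python. The function name and the sample data are my own inventions for illustration; nothing here reflects how any search engine actually does it.

```python
from collections import Counter


def estimate_probabilities(observations):
    """Return the observed relative frequency of each outcome."""
    counts = Counter(observations)
    total = sum(counts.values())
    return {outcome: count / total for outcome, count in counts.items()}


# Hypothetical sample: 8 black ravens and 2 that were some other color.
sightings = ["black"] * 8 + ["other"] * 2
probabilities = estimate_probabilities(sightings)

# The observed frequency becomes the prediction for future ravens.
print(probabilities["black"])  # 0.8
```

The more sightings the algorithm collects, the more that estimate is worth trusting.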

Inductive Reasoning

Inductive reasoning is somewhat similar, in that it also involves extrapolating probabilities, but it’s geared toward proving or disproving a specific theory. For example, if the theory is that a startled dog will snap at a threat, it will gather results from tests and either corroborate or discredit that theory, based upon the preponderance of observed results.
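Here is a rough sketch of that corroborate-or-discredit step. The function name, the 50 percent cutoff for "preponderance" and the test results are all assumptions of mine, chosen only to illustrate the idea.

```python
def evaluate_theory(trials, support_threshold=0.5):
    """Corroborate or discredit a theory by the preponderance of outcomes.

    trials: list of booleans, True when the observed result matched the theory.
    support_threshold: assumed cutoff for what counts as a preponderance.
    """
    if not trials:
        return "undetermined"  # nothing observed yet
    support = sum(trials) / len(trials)
    return "corroborated" if support > support_threshold else "discredited"


# Hypothetical test results: did the startled dog snap at the threat?
dog_trials = [True, True, False, True, True, False, True]
verdict = evaluate_theory(dog_trials)
print(verdict)  # corroborated (5 of 7 trials matched the theory)
```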

Neither statistical nor inductive reasoning models allow for the inclusion of random results, until they represent a significant percentage of the observed results. At that point, the patterns they define can become a factor in a statistical model, whereas in an inductive model, they will only affect findings indirectly.

How This Plays Into Search Engine Algorithms

Suppose, for instance, that a given algorithm is designed to determine how relevant the SERPs are to a query. It might look at a factor such as bounce rate as an indicator of relevance. If the bounce rate for a result stays under a set threshold, the result could be deemed relevant to the query, indicating that the ranking algorithm was accurate. That would be utilizing statistical reasoning.
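As a sketch of that bounce-rate check, consider the following. The function name and the 60 percent threshold are hypothetical; I have no idea what threshold, if any, a real search engine would use.

```python
def serp_result_looks_relevant(visits, bounces, bounce_threshold=0.6):
    """Return True when the observed bounce rate stays at or below the threshold.

    bounce_threshold is an assumed value, picked only for this illustration.
    """
    if visits <= 0:
        return None  # no data, so no verdict either way
    return (bounces / visits) <= bounce_threshold


# Hypothetical numbers for two results shown for the same query.
print(serp_result_looks_relevant(visits=200, bounces=70))   # True  (35% bounce rate)
print(serp_result_looks_relevant(visits=200, bounces=150))  # False (75% bounce rate)
```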

On the other hand, an algorithm designed to detect purchased links could look at the other pages linked to from a source page. If some of those destination pages have been found to be buying links, that could establish some level of probability that your page had also purchased a link from that site. The algorithm would then examine other signals that would corroborate or discredit that probability. That would be utilizing inductive reasoning.

Do we know whether any of the search engines actually use these models in those scenarios? No, of course not. It’s just one of many possibilities. What is more important in our context is, what might a machine learning algorithm be able to learn, in either case?

The statistical model is fairly obvious. Once the algorithm decides that 80 percent of ravens are likely to be black, it can simply weight its predictions accordingly; a purely probabilistic weighting, based upon statistics.

In inductive models, the process is a little more subtle. Typically, there would be a number of other signals, each with their own weighting, which can vary, depending upon their prevalence. That implies a very non-linear probability curve which may be considerably more complex.

In the example above, those signals might include how many other target sites linked to from the source page are suspected of buying links, the probability weighting of those suspicions, the past history of all sites involved in the analysis and a host of others. Combined in a mathematical formula, they yield a probability factor that could put the site over a preset threshold, triggering a dampening or a penalty.
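One way to picture "combined in a mathematical formula" is a weighted sum squashed into a probability. The sketch below uses a logistic function for that, which is my choice for illustration and not something any search engine has confirmed. The signal names, weights, bias and threshold are all made up.

```python
import math


def link_buying_probability(signals, weights, bias=-3.0):
    """Combine weighted signals into a probability using a logistic function."""
    score = bias + sum(weights[name] * value for name, value in signals.items())
    return 1.0 / (1.0 + math.exp(-score))


# Hypothetical weights: how much each signal counts toward suspicion.
weights = {
    "suspect_neighbor_share": 2.0,   # share of other linked sites suspected of buying
    "avg_neighbor_suspicion": 2.0,   # average confidence in those suspicions
    "prior_violations": 1.5,         # past history of the sites involved
}

# Hypothetical readings for one page under review.
signals = {
    "suspect_neighbor_share": 0.7,
    "avg_neighbor_suspicion": 0.8,
    "prior_violations": 1.0,
}

PENALTY_THRESHOLD = 0.75  # assumed preset threshold

probability = link_buying_probability(signals, weights)
flagged = probability > PENALTY_THRESHOLD
print(round(probability, 2), flagged)  # 0.82 True
```

Because the logistic curve is S-shaped, a small change in one signal near the middle of the range can move the result a lot more than the same change at either extreme, which is one simple way to get the non-linear behavior described above.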

Machine Learning Applied to Search Queries and Ranking

So what are the things that algos can and can’t learn? As mentioned, artificial intelligence is still a distant dream. Sentiment analysis, however, although scoffed at by many, isn’t entirely impossible, in my opinion.

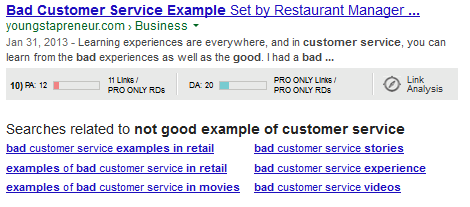

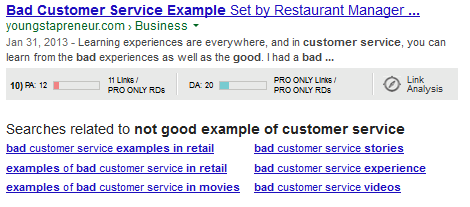

For instance, a simple determination of whether a query or an on-page phrase is negative or positive can often be reached. More complex characteristics like sarcasm, irony or humor, however, are still largely beyond a machine’s comprehension. But a search query for [bad example of customer service] will render first page results with the terms “bad”, “poor” and “worst” in the content, title and/or URL.

This may seem to be a simple instance of recognizing synonyms for the search query, but remember that synonym recognition was just one of the early baby steps in search. Looking at a subtly different search query of [not good example of customer service] yields such pages as:

So “not good” is equated with “bad” by the algorithm. A baby step, perhaps, but a step, nonetheless. Rest assured that Google didn’t manually enter all such possible relationships – there are far too many. This is much better accomplished by developing an algorithm that will constantly adjust its lexicon as it detects patterns.
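Here is a toy sketch of how such a relationship could be picked up from patterns rather than entered by hand. It compares the terms that show up alongside each query modifier and scores their overlap with cosine similarity. The observation log and function names are hypothetical, and this is only one of many ways it might be done.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two term-count profiles."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


# Hypothetical log: a query modifier and terms found in the pages users chose.
observations = [
    ("bad", ["poor", "worst", "complaint"]),
    ("not good", ["poor", "worst", "rude"]),
    ("great", ["excellent", "friendly"]),
]

# Build a profile of co-occurring terms for each modifier.
profiles = {}
for modifier, terms in observations:
    profiles.setdefault(modifier, Counter()).update(terms)

similar = cosine(profiles["bad"], profiles["not good"])
unrelated = cosine(profiles["bad"], profiles["great"])
print(round(similar, 2), unrelated)  # 0.67 0.0
```

As more queries and documents are observed, the profiles fill in and the lexicon adjusts itself, with no one typing in that “not good” means “bad”.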

Those patterns aren’t limited to just positive vs. negative modifiers. They can also be seen with step modifiers, like “big”, “bigger”, “biggest” or “old”, “older”, “oldest”. Even “past”, “present” and “future” can be seen in the SERPs in an interesting fashion, with some imaginative queries.

Machine Learning & Your Content

The algorithms learn from the patterns they detect, both in queries and documents, as well as the relationships they discover between them. That’s why writing content using a broader selection of terms (including adjectives and adverbs, not just nouns and verbs) has a dual effect:

- It provides new syntax in a specific context, which can aid the algorithms’ learning process.

- It also enables you to write content that is more conceptual – directed to the reader, rather than to the search engines.

One end result is a more rapid development of complex understanding by machines – the heart of semantics. It also helps you provide content that is more informative, entertaining, and engaging for your readers. I call that a win-win.
