If we assume, as the joke goes, that the best place to hide a dead body is on page two of Google’s search results, we’ve established that Google has plenty of click data to work with on page one but much less beyond it.

And every CTR study I’m aware of shows the overwhelming majority of clicks on organic listings landing on positions 1-10 (or 1-11).

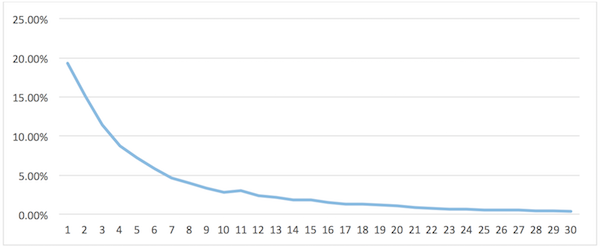

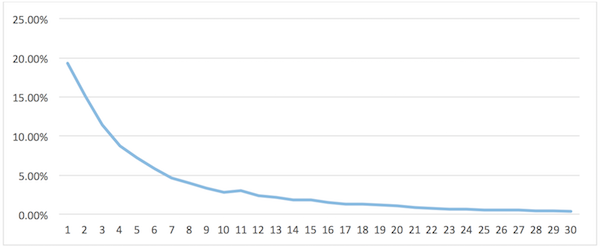

Desktop CTR according to the recent NetBooster study.

If we track enough search terms, we can start to build a picture of where traffic is likely to arrive by applying a CTR model such as the one above.
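As a rough illustration, the sketch below applies a CTR curve to tracked rankings to estimate clicks. The CTR values are placeholder assumptions for illustration only (they are not the NetBooster figures), as are the function names and the flat rate for positions beyond 10; swap in whichever CTR model you trust.

```python
from typing import Dict, Optional, Tuple

# Placeholder CTR curve: organic position -> share of searchers who click.
# Illustrative assumptions only, NOT the NetBooster study's figures.
CTR_BY_POSITION: Dict[int, float] = {
    1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05,
    6: 0.04, 7: 0.03, 8: 0.025, 9: 0.02, 10: 0.02,
}
BEYOND_PAGE_ONE_CTR = 0.005  # assumed flat CTR for positions 11 and deeper


def expected_clicks(monthly_volume: int, position: Optional[int]) -> float:
    """Estimate monthly organic clicks for one keyword at a given rank."""
    if position is None:  # the site does not rank for this keyword at all
        return 0.0
    return monthly_volume * CTR_BY_POSITION.get(position, BEYOND_PAGE_ONE_CTR)


def estimate_traffic(rankings: Dict[str, Tuple[int, Optional[int]]]) -> float:
    """Sum expected clicks over keyword -> (monthly volume, position)."""
    return sum(expected_clicks(vol, pos) for vol, pos in rankings.values())


if __name__ == "__main__":
    # Hypothetical keywords and search volumes.
    rankings = {
        "cheap flights to rome": (12000, 3),
        "rome city break": (4400, 12),
        "rome hotel deals": (2900, None),
    }
    print(round(estimate_traffic(rankings)))  # 1200 + 22 + 0 -> 1222
```

Because so few clicks land beyond page one, errors in the assumed tail rate matter far less than errors in the top few positions.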

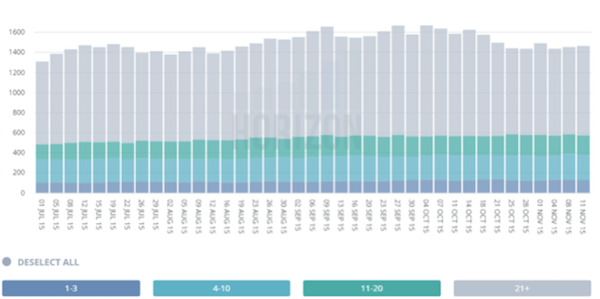

However, if we take CTR out of the equation for a second and focus just on keyword rankings (I can already hear an angry mob assembling at the suggestion), we can see how those rankings fluctuate over time, which will obviously affect our organic traffic.

Where those fluctuations occur has some interesting implications for what drives those rankings in the first place: most fluctuations occur after page three.

Some quick thoughts on the graph above:

- Most of the keywords the site ranks for are outside the top two pages, so naturally there is more fluctuation in these positions simply because there are more keywords in them

- This graph alone doesn’t tell us which keywords are ranking where (though we can obviously find that out), so there’s always a chance that the keywords ranking in positions 1-3 on one day are not the same keywords ranking there the next time we check. But where the first point was about the odds, this one seems like a long shot

- Not every graph looks like this – but many do. It’s possible to start noticing trends in certain industries/SERPs too.

The most significant consideration is that the grey bars don’t really show the number of keywords ranking on a certain page but the number of keywords ranking at all. The site above drops out of the rankings for around 500 queries every couple of days and then becomes relevant again a few days later, and this happens consistently.
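A minimal sketch of how bars like these could be derived from daily rank-tracking exports, assuming each snapshot maps a tracked keyword to its position, with `None` meaning the site doesn’t rank within the tracked depth. The function names and data layout are hypothetical, not those of any particular rank tracker. It counts keywords per results page and lists the keywords that vanished between two checks, the pattern described above.

```python
from collections import Counter
from typing import Dict, Optional, Set

Snapshot = Dict[str, Optional[int]]  # keyword -> position (None = not ranking)
RESULTS_PER_PAGE = 10  # assumes classic ten-result pages


def page_of(position: int) -> int:
    """Map a rank to its results page: 1-10 -> 1, 11-20 -> 2, and so on."""
    return (position - 1) // RESULTS_PER_PAGE + 1


def page_distribution(snapshot: Snapshot) -> Counter:
    """Count ranking keywords per results page."""
    return Counter(page_of(pos) for pos in snapshot.values() if pos is not None)


def ranking_keywords(snapshot: Snapshot) -> Set[str]:
    """Keywords that rank anywhere in the tracked depth (the grey-bar total)."""
    return {kw for kw, pos in snapshot.items() if pos is not None}


def dropped_out(before: Snapshot, after: Snapshot) -> Set[str]:
    """Keywords that ranked in `before` but not at all in `after`."""
    return ranking_keywords(before) - ranking_keywords(after)


if __name__ == "__main__":
    # Hypothetical snapshots from two checks a few days apart.
    monday = {"rome flights": 1, "rome hotels": 14, "rome tours": 35, "rome villas": 47}
    thursday = {"rome flights": 2, "rome hotels": 15, "rome tours": None, "rome villas": None}
    print(sorted(page_distribution(monday).items()))  # [(1, 1), (2, 1), (4, 1), (5, 1)]
    print(sorted(dropped_out(monday, thursday)))      # ['rome tours', 'rome villas']
```

Tracking `dropped_out` day by day is what makes a recurring "disappear, then come back" cycle visible, rather than a single overall visibility number.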

Why might this be happening?

Fluctuation in some SERPs is very pronounced, due to QDF (query deserves freshness). For example, a user searching for the name of a sports team is going to want to find the team’s official site, but also the latest scores, fixtures, news and gossip. As a result, Google will ‘freshen’ its results much more frequently, if not in real time.

However, I chose the website above as an example because it operates in the travel space, where results are often less fresh. So let’s look at another example:

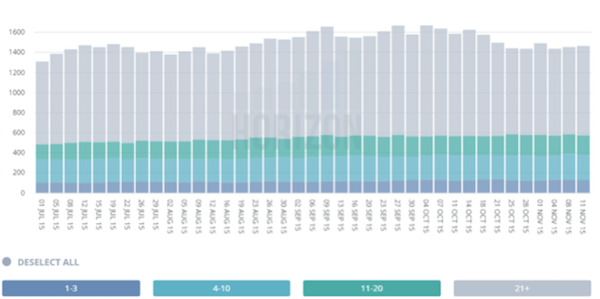

The graph above shows an ecommerce retailer, arguably the biggest ecommerce store in the UK in this particular space (tweet me the answer and I might give you a prize). Here the fluctuation cycles are much longer, but they are still entirely consistent. The site is affected by seasonality (more stock around Christmas, for instance, and a nice injection of inbound links when this year’s Christmas must-haves are announced) but is not really affected by freshness.

It might be argued that strong rankings on some keywords result from the site being well optimised for lucrative keywords while keywords that won’t drive revenue are largely ignored (which is fair enough). But the content on all those other pages doesn’t change in a regular, consistent way, and neither does the content on the pages of any other large site in the industry, so content changes can’t explain fluctuations this regular.

On the same subject, if SEO practitioners were responsible for the site ranking well for certain keywords, we would have to assume that all the sites in the SERPs (multiple SERPs: the graphs feature many hundreds of keywords on the first couple of pages) are acquiring links at almost exactly the same rate, or that links are hardly a factor at all.

Here’s what we think: the fluctuations happen because the ranking factors after page two are so different from those for the top 10 or 20 results. After this point, other factors, PageRank for instance, take precedence.

On the first page or two, Google has enough click data to build a robust ranking algorithm. Beyond that point, it doesn’t have the data it needs.
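To make that hypothesis concrete, here is a deliberately simple toy model. It is not Google’s algorithm and not something we can observe directly: each result’s score blends a click-based signal with a link-based one, and the weight on clicks grows with the amount of click data available. The `link_score`, `click_score` and smoothing constant `k` are all hypothetical.

```python
def blended_score(link_score: float, click_score: float,
                  impressions: int, k: float = 1000.0) -> float:
    """Toy ranking score that trusts click data in proportion to its volume.

    The click weight is close to 0 when impressions are scarce (deep pages) and
    approaches 1 when they are plentiful (page one). `k` is an arbitrary,
    assumed constant controlling how quickly that trust builds up.
    """
    click_weight = impressions / (impressions + k)
    return click_weight * click_score + (1 - click_weight) * link_score


if __name__ == "__main__":
    # Hypothetical pages: A is strong on links, B is the one searchers prefer.
    page_a = {"link_score": 0.8, "click_score": 0.3}
    page_b = {"link_score": 0.4, "click_score": 0.7}
    for impressions in (10, 100_000):
        a = blended_score(impressions=impressions, **page_a)
        b = blended_score(impressions=impressions, **page_b)
        print(impressions, "A wins" if a > b else "B wins")
```

With only 10 impressions the link-heavy page A comes out on top; with 100,000 the order flips to B. Under this toy model, deep results would be ordered mostly by a slower-moving link signal, while page-one results would follow click behaviour.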

Which keywords are we looking at?

To generate the graphs, we must input keywords manually. The keywords we’re visualising are exclusively ones we want to see: no brand terms and no junk terms from slightly off-topic blog posts. As a result, we get a better picture of an industry rather than just a picture of a site’s overall visibility for every keyword it ranks for, accidentally or otherwise.

When (not provided) made it impossible to attribute search traffic to specific keywords in analytics, it became necessary to track keyword rankings both more frequently and in larger numbers.

Where a client’s SEO report once contained the hundred or so keywords we cared about most, we now track thousands of keywords for the businesses we work with. If traffic fluctuates, we need to know where we have potentially lost or gained visitors.
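One way to answer that question, sketched under assumptions: compare two ranking snapshots, convert each position into estimated clicks with a CTR curve, and sort keywords by the change. The function names and the example CTR curve are hypothetical placeholders; the curve is a parameter so you can plug in whichever CTR model you use.

```python
from typing import Callable, Dict, List, Optional, Tuple

CtrCurve = Callable[[int], float]  # position -> expected click-through rate


def estimated_clicks(volume: int, position: Optional[int], ctr: CtrCurve) -> float:
    """Expected clicks for one keyword; 0 when the site doesn't rank."""
    return 0.0 if position is None else volume * ctr(position)


def traffic_deltas(volumes: Dict[str, int],
                   before: Dict[str, Optional[int]],
                   after: Dict[str, Optional[int]],
                   ctr: CtrCurve) -> List[Tuple[str, float]]:
    """Per-keyword change in estimated clicks, biggest losses first."""
    deltas = [
        (kw, estimated_clicks(vol, after.get(kw), ctr)
             - estimated_clicks(vol, before.get(kw), ctr))
        for kw, vol in volumes.items()
    ]
    return sorted(deltas, key=lambda item: item[1])


if __name__ == "__main__":
    # Placeholder CTR curve, not taken from any published study.
    curve = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}

    def ctr(pos: int) -> float:
        return curve.get(pos, 0.01)  # assumed flat rate below position 5

    volumes = {"rome flights": 10000, "rome hotels": 5000}
    before = {"rome flights": 2, "rome hotels": 12}
    after = {"rome flights": 5, "rome hotels": 3}
    for kw, delta in traffic_deltas(volumes, before, after, ctr):
        print(f"{kw}: {delta:+.0f}")
```

Keywords missing from a snapshot are treated as not ranking, so a term that drops out entirely shows up as a full loss rather than silently disappearing from the report.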

Though we’re reporting on far more keywords than ever before, this obviously doesn’t change what a client does or does not rank for. The advantages are that…

- We have a more comprehensive list of opportunities: keywords that are relevant but not performing as well as they could (bottom of page 1, top of page 2, etc.) are obvious focus points, letting us increase relevant traffic by changing metadata or content, for example

- We are able to ‘bucket’ or group large numbers of keywords together and estimate traffic for a particular service we offer or location we operate in

- We can make an informed decision about whether a single page is relevant for too many terms, in which case we should look to create a separate page or subpage, or whether two pages are competing for the same search rankings and should be combined to provide a better user experience
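The first two advantages can be sketched together. Assuming each tracked keyword is tagged with a group (a service or location) and a monthly search volume, the code below flags "striking distance" keywords and totals estimated traffic per group. The positions 8 to 15 window standing in for "bottom of page 1, top of page 2", the field names and the example CTR function are all hypothetical choices.

```python
from collections import defaultdict
from typing import Callable, Dict, List, NamedTuple, Optional


class TrackedKeyword(NamedTuple):
    keyword: str
    group: str               # hypothetical bucket label, e.g. a service or location
    volume: int              # monthly searches
    position: Optional[int]  # None = not ranking


# Assumed "bottom of page 1, top of page 2" window.
OPPORTUNITY_MIN, OPPORTUNITY_MAX = 8, 15


def opportunities(keywords: List[TrackedKeyword]) -> List[TrackedKeyword]:
    """Keywords just short of strong positions, highest search volume first."""
    hits = [k for k in keywords
            if k.position is not None
            and OPPORTUNITY_MIN <= k.position <= OPPORTUNITY_MAX]
    return sorted(hits, key=lambda k: k.volume, reverse=True)


def traffic_by_group(keywords: List[TrackedKeyword],
                     ctr: Callable[[int], float]) -> Dict[str, float]:
    """Estimated monthly clicks per bucket, using a caller-supplied CTR curve."""
    totals: Dict[str, float] = defaultdict(float)
    for k in keywords:
        if k.position is not None:
            totals[k.group] += k.volume * ctr(k.position)
    return dict(totals)


if __name__ == "__main__":
    tracked = [
        TrackedKeyword("rome city break", "rome", 1300, 9),
        TrackedKeyword("rome hotel deals", "rome", 480, 3),
        TrackedKeyword("paris city break", "paris", 720, 14),
        TrackedKeyword("paris hotel deals", "paris", 590, None),
    ]
    print([k.keyword for k in opportunities(tracked)])
    # Placeholder CTR curve: 10% on page one, 1% beyond (an assumption).
    print(traffic_by_group(tracked, lambda pos: 0.10 if pos <= 10 else 0.01))
```

Sorting opportunities by volume is a simple default; relevance or commercial value could be a better sort key for a given client.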

The obvious disadvantage is that we have moved from a model where we may track 100 traffic-driving keywords to one where we report on those 100 keywords plus potentially 900 more that perform less strongly or drive less traffic, and reporting on all of them to their superiors would largely be a waste of a client’s time.

For this reason we developed a dashboard that presents this information in a meaningful way and lets clients filter it however they want, without losing the granularity we need for monitoring.

Why does this matter?

The difference between positions one and two for a competitive keyword comes down to the number and quality of links, right? Site B just needs more, better links to overtake site A? Wrong. That hasn’t been the case for a long time. We’ve been saying for years that if you’ve got enough links to rank fifth, you’ve got enough links to rank first.

The implications across different industries are also interesting; we’re looking into them and I’ll follow up with another blog post soon. It looks to us like Google is turning the importance of PageRank right down in SERPs it considers ‘spammy’. In gambling, for example, CTR is a much stronger ranking signal than in other sectors: sites with better sign-up bonuses etc. get more clicks and as a consequence tend to rank quite well. From a search engine’s perspective, it makes no sense to treat all sites equally when nine of the top 10 are buying poor-quality links en masse.

Former Google Engineer (officially current Google Engineer) Matt Cutts stated in a Webmaster video in May 2011 that in SERPs such as porn, where people don’t link (naturally), Google largely ignores the link graph and focuses on other metrics to provide ranking data. We don’t have any ranking data for porn sites because nobody has ever paid us to do that – so if you’re listening PornHub, you know where to find me (please use my Twitter handle, not my IP address).

Do you think something else is at play here? Search Engine Watch would love to hear your opinion in the comments.