Nine site audit issues we always see and tips to tackle them

Site audit issues and technical changes can be tricky and incredibly time-consuming to spot and implement. Here are nine standout issues and tips for tackling them.

After carrying out thousands of site audits across varying industries and site sizes, we see some standout issues repeated over and over again.

Certain CMS platforms have their shortcomings and cause the same technical issues repeatedly, but most of the time these issues come down to sites being managed by multiple people, knowledge gaps, and a simple lack of time.

We tend to use two crawlers at Zazzle Media, both of which are mentioned throughout this post. The first is Screaming Frog, which we use when we need raw exports or need to be very specific about what we are crawling. The second is Sitebulb, which is much more of a site audit tool than a crawler. We tend to use Sitebulb more because it lets us manage projects and track the overall progress a site is making.

So let’s get started with the issues we see time and time again.

Broken internal links are one of the simpler issues, but they can be missed if you aren’t looking out for them. Broken links can disrupt the user journey, and for search engines they prevent crawl bots from connecting pieces of content.

Internal links are mainly used to connect pieces of content, and in terms of Google’s algorithm, internal links allow link equity to be distributed from one page to another. A broken link disrupts this, because equity cannot transfer from one page to another through a link that does not resolve. In terms of PageRank, Google’s algorithm evaluates the number of high-quality links to a page in order to determine page authority.

Put simply, a broken internal link can negatively affect page authority and stop the flow of link equity.

The scale of this issue will vary dramatically depending on the type of site you are running. However, on most sites there will be some form of broken links.

A simple crawl will pick these up. Running a tool such as Screaming Frog with a basic configuration will provide a full list of broken links, alongside the parent URL of each.
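As a minimal sketch of what to do with such an export, the snippet below filters a list of links down to those answering with a 4xx or 5xx status, keeping the parent URL next to each. The column names (`source`, `destination`, `status_code`) and the sample data are assumptions for illustration, not the headers of any particular crawler's export.

```python
import csv
import io


def broken_links(rows):
    """Return (parent_url, target_url, status) for links answering 4xx/5xx."""
    broken = []
    for row in rows:
        status = int(row["status_code"])
        if status >= 400:
            broken.append((row["source"], row["destination"], status))
    return broken


# Hypothetical export: column names and URLs are illustrative assumptions.
SAMPLE_EXPORT = """source,destination,status_code
https://example.com/,https://example.com/about,200
https://example.com/blog,https://example.com/old-post,404
https://example.com/shop,https://example.com/cart,500
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE_EXPORT)))
for parent, target, status in broken_links(rows):
    print(f"{parent} links to {target} ({status})")
```

Grouping the result by parent URL is often the more useful view, since each fix is made on the page that holds the link.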

Based on the number of times this occurs, it can be a very minimal problem or something that could dramatically alter a whole business.

Short meta titles could indicate a lack of targeting, while long titles are likely to be truncated in the search results, which in turn can lower click-through rates.

To write meta titles and descriptions that maximize pixel usage and CTA, we recommend using the Sistrix SERP generator tool.
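Before reaching for a SERP preview tool, a rough first pass over a crawl export can flag titles that are obviously too short or too long. The sketch below uses character counts as a crude stand-in for pixel width; the 30 and 60 character thresholds are assumptions chosen for illustration, not limits published by any search engine.

```python
MIN_CHARS = 30  # assumption: below this, a title is probably under-targeted
MAX_CHARS = 60  # assumption: above this, a title is at risk of truncation


def classify_title(title, min_chars=MIN_CHARS, max_chars=MAX_CHARS):
    """Label a meta title as 'missing', 'short', 'long' or 'ok' by length."""
    length = len(title.strip())
    if length == 0:
        return "missing"
    if length < min_chars:
        return "short"
    if length > max_chars:
        return "long"
    return "ok"


# Hypothetical titles keyed by URL.
titles = {
    "/": "Home",
    "/shoes": "Trail Running Shoes for Men and Women | Example Store",
    "/empty": "",
}

for url, title in titles.items():
    print(url, classify_title(title))
```

Truncation in real results depends on rendered width, so anything flagged here is a candidate for checking in a preview tool rather than a definitive verdict.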

Internal links that redirect can cause problems for your site architecture, as it takes slightly longer for users and search engines to reach the content. When content changes or products sell out, either a permanent (301) or temporary (302) redirect is typically used. A 302 redirect tells a search engine to keep the old page, as the redirect is only a temporary measure. A 301 redirect tells the search engine that the page has permanently moved to the new location.

A redirect chain occurs when a URL redirects to a page, which in turn redirects to another page, and so on until the request finally reaches its destination. In a redirect loop, the chain circles back on itself and never reaches a final destination at all. Both should be avoided at all costs, as they increase crawl time and can send mixed signals to search bots.

The problem isn’t with redirecting a URL (if completed correctly); the issue lies with the links that still point to the redirecting URL. For example, URL A redirects to a new URL B, but URL C still links to URL A when it should link directly to URL B.

Sitebulb can crawl and find all the URLs that currently link to the redirecting URL, so you can then change the href target to point to the new URL via the CMS.
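The URL A, B and C example can be sketched in a few lines. Given a map of redirects and a list of internal links (both hypothetical here), the code follows each redirect to its final destination, detects loops, and lists the links whose href should be updated. This is an illustration of the idea under those assumptions, not a description of how Sitebulb works internally.

```python
def resolve_redirect(url, redirects, max_hops=10):
    """Follow redirects from url.

    Returns (final_url, hops), or (None, hops) when a loop is detected
    or the chain is longer than max_hops.
    """
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            return None, hops
        seen.add(url)
    return url, hops


def links_to_update(internal_links, redirects):
    """Return (page, old_href, new_href) for links pointing at redirecting URLs."""
    updates = []
    for page, href in internal_links:
        if href in redirects:
            final_url, _hops = resolve_redirect(href, redirects)
            updates.append((page, href, final_url))
    return updates


# Hypothetical data: /a redirects to /b, while /x and /y redirect to each other.
redirects = {"/a": "/b", "/x": "/y", "/y": "/x"}
internal_links = [("/c", "/a"), ("/c", "/b"), ("/d", "/x")]

for page, old, new in links_to_update(internal_links, redirects):
    print(page, old, "->", new if new else "redirect loop, needs manual review")
```

A `None` target marks a loop, which has to be fixed at the redirect level before the link itself can be corrected.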

Links to redirecting URLs should be avoided where possible, as they can increase a search bot’s crawl time, potentially leading to some of the website’s URLs being skipped within the allocated crawl.

XML sitemaps do not have to be static; on larger websites, continuously updating the XML file by hand would be very time-consuming. We recommend using a dynamic XML sitemap, as this ensures that every time a piece of content or media is added, your CMS automatically updates the file. A Sitebulb audit will highlight whether your website is missing a sitemap.

It is really important to use dynamic XML sitemaps correctly, as in some cases the dynamic sitemap can end up including URLs you do not want in it.

If you are using a standard CMS such as WordPress, add /sitemap.xml to the end of your domain; this should show your website’s sitemap.
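In practice the CMS or a plugin generates the dynamic sitemap, but the idea, including the filtering that keeps unwanted URLs out, can be sketched with the standard library. The page records and their `noindex` and `status` fields below are hypothetical stand-ins for whatever your CMS exposes.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from page records, skipping unwanted URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        # Leave out pages marked noindex and pages that do not return 200.
        if page.get("noindex") or page.get("status", 200) != 200:
            continue
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["loc"]
        if "lastmod" in page:
            ET.SubElement(url, "lastmod").text = page["lastmod"]
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical page records, as a CMS might hold them.
pages = [
    {"loc": "https://example.com/", "lastmod": "2024-01-15"},
    {"loc": "https://example.com/shoes"},
    {"loc": "https://example.com/thank-you", "noindex": True},
    {"loc": "https://example.com/old-sale", "status": 404},
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Regenerating this output whenever content is published or removed is what makes the sitemap dynamic; the filter is the part that most often goes wrong.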

Orphan pages, otherwise known as “floating pages”, are URLs that are indexed and published but cannot be found by either users or search engines following internal links. This means that an orphan page can end up never being crawled. A typical example of an orphan page is a winter sale page that was once needed but, now that the season has passed, no longer is.

Essentially, a few orphan pages are not harmful; however, a large number of them can bloat your website. The result is poor link equity distribution, keyword cannibalization (for which we have a separate guide here), and a poor internal linking experience for both search bots and users.

As this is a specific type of crawl, Zazzle Media uses Screaming Frog to crawl the sitemap data. At the same time, we run another crawl with either Screaming Frog or Sitebulb to find the orphan pages by comparing the two data sets.
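Comparing the two data sets boils down to a set difference: any URL that appears in the sitemap crawl but not in the link-following crawl is a candidate orphan. The sketch below assumes both crawls have been exported as plain lists of URLs; the trailing-slash normalization is a simplifying assumption and may need adapting to your site.

```python
def normalize(url):
    """Strip whitespace and a trailing slash so equivalent URLs compare equal."""
    return url.strip().rstrip("/")


def find_orphans(sitemap_urls, crawled_urls):
    """Return sitemap URLs that the link-following crawl never reached."""
    crawled = {normalize(u) for u in crawled_urls}
    in_sitemap = {normalize(u) for u in sitemap_urls}
    return sorted(in_sitemap - crawled)


# Hypothetical exports from the two crawls.
sitemap_urls = [
    "https://example.com/",
    "https://example.com/shoes/",
    "https://example.com/winter-sale",
]
crawled_urls = [
    "https://example.com",
    "https://example.com/shoes",
]

print(find_orphans(sitemap_urls, crawled_urls))
```

Orphans that are missing from the sitemap as well will not show up this way; analytics or server log data would be needed to surface those.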

Read our quick guide that concerns orphan URLs and how to deal with them for a more in-depth approach.

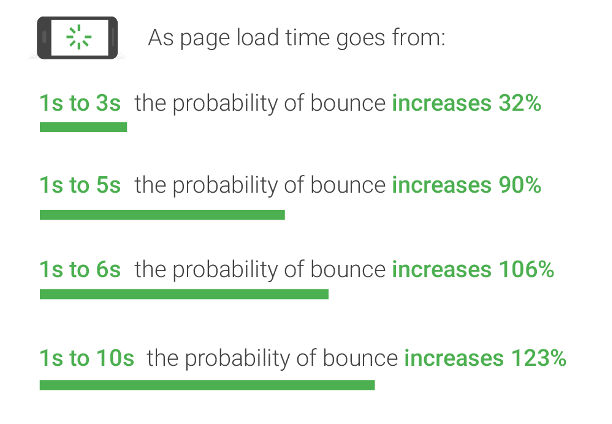

Google has previously indicated that site speed is a ranking factor and, more specifically, part of its ranking algorithm for search engine results. This is because site speed is closely related to good user experience: slow websites tend to have high bounce rates because content takes a long time to load. Improving your website’s speed should therefore improve the user experience and could also reduce bounce rate.

Source: Search Influence, 2017

Additionally, as faster sites tend to see lower bounce rates, this could in turn boost revenue, as users remain engaged on your website for longer.

To check your website’s speed, we recommend using Google’s own PageSpeed Insights tool, which will not only give you a page speed score but also a host of recommendations on how best to improve your site speed, and show how you compare to the search competition.

A website’s hierarchy structure, otherwise known as its information architecture, is essentially how your website’s navigation is presented to a search engine or user. The fundamental issue that most websites suffer from is PageRank distribution.

A website’s main pages or most profitable pages should be within three clicks of the homepage. Pages that are more than three clicks away from the homepage receive less PageRank and, in some scenarios, will only occasionally be crawled (if ever).

Without an effective hierarchy, crawl budget can be wasted. Pages in the depths of your website (more than three clicks away from the root) could rank poorly, as Google is unsure of their importance and link equity is spread thinly.
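Click depth can be measured with a breadth-first search over the internal link graph, starting from the homepage. The sketch below assumes the graph is available as a dictionary mapping each page to the pages it links to (for example, built from a crawl export); the sample graph and the `max_clicks` default are illustrative.

```python
from collections import deque


def click_depths(links, home):
    """Return the minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links, home, max_clicks=3):
    """Return pages that sit more than max_clicks away from home."""
    depths = click_depths(links, home)
    return sorted(page for page, depth in depths.items() if depth > max_clicks)


# Hypothetical link graph: page -> pages it links to.
links = {
    "/": ["/shop"],
    "/shop": ["/shop/shoes"],
    "/shop/shoes": ["/shop/shoes/trail"],
    "/shop/shoes/trail": ["/shop/shoes/trail/model-x"],
}

print(too_deep(links, "/"))
```

Adding a link from a shallower page, such as a category hub, to a page in the result is the simplest way to pull it back within three clicks.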

An SEO and user-friendly site architecture is all about allowing search bots and users to seamlessly navigate your website. Flattening your site architecture can increase indexation, allow more keyword rankings, and in turn boost organic traffic.

Internal linking is an important feature of a website as this allows users to navigate your website, and most importantly (from an SEO perspective) allows search engine crawlers to understand the connections between content. An effective internal linking strategy could have a big impact on rankings.

It is no surprise to us when a Sitebulb audit recommends reviewing your internal linking strategy, as complex sites with thousands of pages can get messy. Typical examples of a messy internal linking structure include anchor texts that do not contain a keyword, inconsistencies in the number of links pointing to each URL (which affects PageRank distribution), and links that do not always point to the canonical version of a URL. Issues such as these can create mixed signals for search engine crawlers and ultimately confuse a crawler when it comes to indexing your content.

Sitebulb can complete an audit that highlights any issues with link distribution, shows which pages receive the most internal links, flags any broken or incorrectly used internal links, and much more. We then digest this data to devise a strategy for how best to optimize your website’s internal linking.
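Two of the checks described above, link volume per page and links that miss the canonical URL, are simple to reproduce on exported link data. The sketch below assumes links are available as (source, target) pairs and that a mapping from non-canonical to canonical URLs exists; both structures are hypothetical and not an export format of any specific tool.

```python
from collections import Counter


def inlink_counts(links):
    """Count how many internal links point at each target URL."""
    return Counter(target for _source, target in links)


def non_canonical_links(links, canonical_of):
    """Return (source, target, canonical) for links that miss the canonical URL."""
    return [
        (source, target, canonical_of[target])
        for source, target in links
        if canonical_of.get(target, target) != target
    ]


# Hypothetical link data and canonical mapping.
links = [
    ("/", "/shoes"),
    ("/blog/fit-guide", "/shoes"),
    ("/blog/fit-guide", "/shoes?ref=blog"),
    ("/", "/about"),
]
canonical_of = {"/shoes?ref=blog": "/shoes"}

for url, count in inlink_counts(links).most_common():
    print(count, url)
print(non_canonical_links(links, canonical_of))
```

Pages with very few inbound links relative to their commercial importance are usually the first candidates for new internal links.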

Writing unique pieces of content that provide value to a user can be incredibly challenging and, most importantly, time-consuming. Hence, thin content is one of the most frequent issues we see in website audits. More specifically, thin content goes against Google’s guidelines and, in a worst-case scenario, can result in a penalty.

When crawling your website, search engines are looking for functional pieces of content to understand your business services and product offerings. They are also looking for signs of your expertise, quality, and trust. Google has a huge 166-page ‘Search Quality Guidelines’ document that explains what search quality constitutes. We recommend familiarizing yourself with this document to ensure that the content you write for your website is in line with Google’s search guidelines.

This is a regular issue that many websites overlook, but fixing it is a critical route to organic success.

A Sitebulb audit will identify any URLs with thin content, and prioritize the severity of the issue. Aim for about 350 – 500 words per page to succinctly communicate your information. However, the quality of this content is still a very important factor.
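A quick word count over extracted page text gives a first list of pages to review. The sketch below uses the lower end of the 350 to 500 word range as its threshold and assumes the main body text of each page has already been extracted (the sample pages are made up). It says nothing about quality, which still needs a human read.

```python
import re


def word_count(text):
    """Count words in plain text using a simple word-boundary pattern."""
    return len(re.findall(r"\b\w+\b", text))


def thin_pages(pages, min_words=350):
    """Return URLs whose body text has fewer than min_words words."""
    return sorted(url for url, text in pages.items() if word_count(text) < min_words)


# Hypothetical pages: URL -> extracted body text.
pages = {
    "/shoes": "Buy now",
    "/guide": "word " * 400,
}

print(thin_pages(pages))
```

Navigation, footers and other template text should be excluded before counting, otherwise every page will look longer than it really is.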

These are just some of the most common types of issues discovered from an SEO audit, and technical changes can be tricky as well as incredibly time-consuming to implement at times. Completing a technical audit of your website, and correcting any issues can lead to improving keyword rankings, organic traffic, and if the products/services are right, achieve more sales.

The sky’s the limit when it comes to search engine optimization and with the landscape constantly changing, this is a superior strategy to achieve long term competitive advantage in the digital landscape.