Unless you’ve had your head under a rock you’ve undoubtedly heard the rumblings of a coming Google Penguin update of significant proportions.

To paraphrase Google’s web spam lead Matt Cutts, the algorithm filter has “iterated” to date, but a “next generation” is coming that will have a major impact on SERPs.

Having watched the initial rollout take many by surprise, it makes sense this time to at least attempt to prepare for what may be lurking around the corner.

Google Penguin: What We Know So Far

We know that Penguin is purely a link quality filter that sits on top of the core algorithm, runs sporadically (the last official update was in October 2012), and is designed to take out sites that use manipulative techniques to improve search visibility.

And while there have been many examples of this being badly executed, with lots of site owners and SEO professionals complaining of injustice, it is clear that web spam engineers have collected a lot of information over recent months and have improved results in many verticals.

That means Google’s team is now on top of the existing data pile and the testing output, and as a result they are hungry for another major structural change to the way the filter works.

We also know that months of manual resubmissions and disavows have helped the Silicon Valley giant collect an unprecedented amount of data about the “bad neighborhoods” of links that had powered rankings until very recently, for thousands of high profile sites.

They have even been involved in specific and high profile web spam actions against sites like Interflora, working closely with internal teams to understand where links came from and watch closely as they were removed.

In short, Google’s new data pot makes most big data projects look like a school register! All the signs therefore point towards something much more intelligent and all encompassing.

The question is: how can you profile your links and gauge the probability of being impacted when Penguin hits within the next few weeks or months?

Let’s look at several evidence-based theories.

The Link Graph – Bad Neighborhoods

Google knows a lot about what bad links look like now. They know where a lot of them live and they also understand their DNA.

And once they start looking it becomes pretty easy to spot the links muddying the waters.

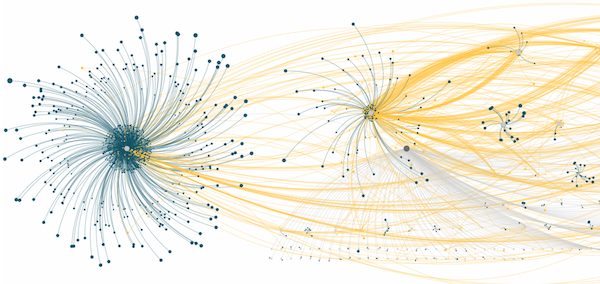

The link graph is a kind of network graph and is made up of a series of “nodes” or clusters. Clusters form around IPs and as a result it becomes relatively easy to start to build a picture of ownership, or association. An illustrative example of this can be seen below:

Google assigns weight or authority to links using its own PageRank currency, but like any currency it is limited and that means that we all have to work hard to earn it from sites that have, over time, built up enough to go around.

This means that almost all sites that use “manipulative” authority to rank higher will be getting it from an area or areas of the link graph associated with other sites doing the same. PageRank isn’t limitless.

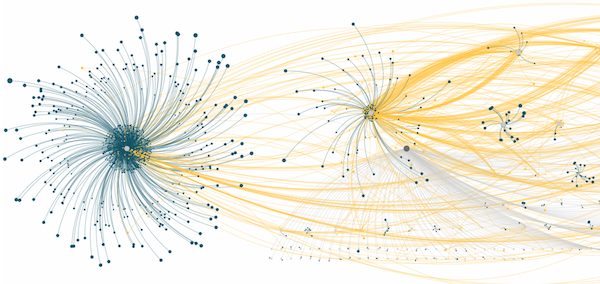

These “bad neighborhoods” can be “extracted” by Google, analyzed and dumped relatively easily to leave a graph that looks a little like this:

They won’t disappear, but Google will devalue them and remove them from the PageRank picture, rendering them useless.

Expect this process to accelerate now that the search giant has so much data on “spammy links,” and expect swathes of link profiles to be knocked out overnight.

The concern of course is that there will be collateral damage, but with any currency rebalancing, which is really what this process is, there will be winners and losers.
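To make the idea of clusters forming around IPs a little more concrete, here is a minimal sketch of how you might audit your own backlinks for this kind of footprint. It is not how Google does it; it simply groups linking domains by the /24 network their host IP sits in and flags any network supplying several of your links. The domains, IPs, the `prefix` value and the `min_domains` threshold are all hypothetical assumptions chosen for illustration.

```python
from collections import defaultdict
import ipaddress


def cluster_by_subnet(backlinks, prefix=24):
    """Group linking domains by the IPv4 network their host IP falls in.

    `backlinks` is an iterable of (domain, ip) pairs. The /24 default is
    an assumption for this sketch, not a known Google threshold.
    """
    clusters = defaultdict(set)
    for domain, ip in backlinks:
        # strict=False lets us pass a host address and get its network back
        network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
        clusters[str(network)].add(domain)
    return {net: sorted(domains) for net, domains in clusters.items()}


def crowded_subnets(clusters, min_domains=3):
    """Return only the networks that host at least `min_domains` linking domains."""
    return {net: doms for net, doms in clusters.items() if len(doms) >= min_domains}


# Hypothetical backlink data (documentation-range IPs, made-up domains)
backlinks = [
    ("blog-one.example", "203.0.113.10"),
    ("blog-two.example", "203.0.113.27"),
    ("blog-three.example", "203.0.113.200"),
    ("news-site.example", "198.51.100.5"),
]

clusters = cluster_by_subnet(backlinks)
suspicious = crowded_subnets(clusters)
print(suspicious)
```

A crowded network is not proof of a bad neighborhood (shared hosting produces the same pattern), so treat the output as a list of places to look more closely rather than a verdict.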

Link Velocity

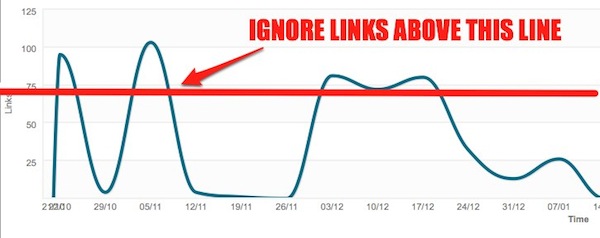

Another area of interest at present is the rate at which sites acquire links. In recent months there has definitely been a noticeable change in how new links are being treated. While this is very much theory, my view is that Google has now become very good at spotting link velocity “spikes,” and anything out of the ordinary is immediately devalued.

Whether this is indefinite or limited by time (in the same way the “sandbox” works) I am not sure, but there are definite correlations between sites that earn links consistently and good ranking increases. Those that earn lots quickly do not get the same relative effect.

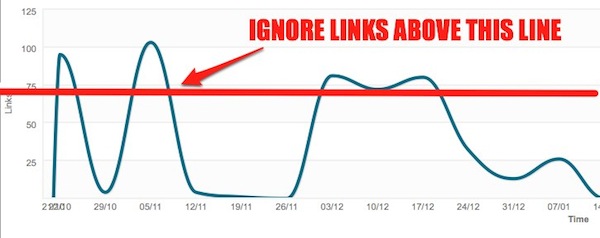

And it would be relatively straightforward to move into the Penguin model, if it isn’t there already. The chart below shows an example of a “bumpy” link acquisition profile and as in the example anything above the “normalized” line could be devalued.
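If you want to test your own profile against this theory, the “normalized” line can be approximated very roughly. The sketch below is my own assumption of how that might look, not a known Google formula: it takes the median of monthly new-link counts as the baseline, allows a tolerance above it, and reports how many links in each month sit above that ceiling. The monthly figures and the `tolerance` value are hypothetical.

```python
from statistics import median


def excess_over_baseline(monthly_new_links, tolerance=1.5):
    """Return, per month, how many new links sit above the 'normalized' line.

    The line is assumed to be median * tolerance; both the use of the
    median and the 1.5 multiplier are illustrative choices only.
    """
    baseline = median(monthly_new_links)
    ceiling = baseline * tolerance
    return [max(0, count - ceiling) for count in monthly_new_links]


# Hypothetical new links per month for a "bumpy" profile
monthly = [40, 45, 38, 300, 42, 47, 250, 44]

excess = excess_over_baseline(monthly)
spike_months = [i for i, extra in enumerate(excess) if extra > 0]
print(spike_months)
```

The median is used rather than the mean so that the spikes themselves do not drag the baseline upward.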

Link Trust

The “trust” of a link is also something of interest to Google. Quality is one thing (how much juice the link carries), but trust is entirely another thing.

Majestic SEO has captured this reality best with the launch of its new Citation and Trust flow metrics to help identify untrusted links.

How is trust measured? In simple terms it is about good and bad neighborhoods again.

In my view Google uses its Hilltop algorithm, which identifies so-called “expert documents” (websites) across the web, which are seen as shining beacons of trust and delight! The closer your site is to those documents the better the neighborhood. It’s a little like living on the “right” road.

If your link profile contains a good proportion of links from trusted sites then that will act as a “shield” from future updates and allow some slack for other links that are less trustworthy.

Social Signals

Many SEO pros believe that social signals will play a more significant role in the next iteration of Penguin.

While social authority, as it is becoming known, makes a lot of sense in some markets, it also has limitations. Many verticals see little to no social interaction, and without big pots of social data a system that qualifies link quality by the number of social shares across a site or piece of content can’t work effectively.

In the digital marketing industry it would work like a dream but for others it is a non-starter, for now. Google+ is Google’s attempt to fill that void and by forcing as many people as possible to work logged in they are getting everyone closer to Plus and the handing over of that missing data.

In principle it is possible though that social sharing and other signals may well be used in a small way to qualify link quality.

Anchor Text

Most SEO professionals will point to anchor text as the key telltale metric when it comes to identifying spammy link profiles. The first Penguin rollout would undoubtedly have used this data to begin drilling down into link quality.

I asked a few prominent SEO professionals their opinions on what the key indicator of spam was in researching this post and almost all pointed to anchor text.

“When I look for spam the first place I look is around exact match anchor text from websites with a DA (domain authority) of 30 or less,” said Distilled’s John Doherty. “That’s where most of it is hiding.”

His thoughts were backed up by Zazzle’s own head of search Adam Mason.

“Undoubtedly low value websites linking back with commercial anchors will be under scrutiny and I also always look closely at link trust,” Mason said.

The key is the relationship between branded and non-branded anchor text. Any natural profile would be heavily led by branded anchors (e.g., www.example.com/xxx.com) and “white noise” anchors (e.g., “click here”, “website”, etc.).

The allowable percentage is tightening. A recent study by Portent found that the percentage of “allowable” spammy links has been reducing for months now, standing at around 80 percent pre-Penguin and 50 percent by the end of last year. The same is true of exact match anchor text ratios.

Expect this to tighten even more as Google’s understanding of what natural “looks like” improves.
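A quick way to see where your own profile stands is to bucket your anchors and look at the percentages. The following is a minimal sketch under my own assumptions: the `WHITE_NOISE` set, the brand terms, the commercial (“money”) terms and the sample anchors are all hypothetical, and real data would come from an export from one of the link tools.

```python
from collections import Counter

# Hypothetical list of generic anchors; extend it to suit your market
WHITE_NOISE = {"click here", "website", "here", "read more", "this site"}


def classify_anchor(anchor, brand_terms, money_terms):
    """Label one anchor as branded, white_noise, exact_match or other."""
    text = anchor.strip().lower()
    if any(term in text for term in brand_terms):
        return "branded"
    if text in WHITE_NOISE:
        return "white_noise"
    if text in money_terms:
        return "exact_match"
    return "other"


def anchor_profile(anchors, brand_terms, money_terms):
    """Return the percentage share of each anchor type in the profile."""
    if not anchors:
        return {}
    counts = Counter(classify_anchor(a, brand_terms, money_terms) for a in anchors)
    total = len(anchors)
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


# Hypothetical anchors pointing at a hypothetical flower retailer
anchors = [
    "Example Ltd", "www.example.com", "click here", "cheap flowers",
    "cheap flowers", "cheap flowers", "website", "flower delivery tips",
]

profile = anchor_profile(anchors, brand_terms={"example"}, money_terms={"cheap flowers"})
print(profile)
```

In this made-up profile exact match anchors outweigh branded ones, which is the kind of imbalance worth correcting before the thresholds tighten further.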

Relevancy

One area that will certainly be under the microscope as Google looks to improve its semantic understanding is relevancy. As it builds up a picture of relevant associations, that data can be used to assign more weight to relevant links. Penguin will certainly be targeting links with no relevance in future.

Traffic Metrics

While traffic metrics probably fall more under Panda than Penguin, the lines between the two are increasingly blurring to a point where the two will shortly become indistinguishable. Panda has already been subsumed into the core algorithm and Penguin will follow.

On that basis Google could well look at traffic metrics such as visits from links and the quality of those visits based on user data.

Takeaways

No one is in a position to accurately predict what the next coming will look like. What we can be certain of is that Google will turn the knife a little more, making link building in its former sense a riskier tactic than ever. As numerous posts have pointed out in recent months, it is now about earning those links by contributing and adding value via content.

If I were asked where my money was, I would say we will see a further tightening of the allowable level of spam, plus some attempt to begin measuring link authority by the neighborhood a link comes from and any social signals associated with it. The rate at which links are earned will also come under more scrutiny, and that means you should think about:

- Understanding your link profile in much greater detail. Tools and data from companies such as Majestic, Ahrefs, CognitiveSEO, and others will become more necessary to mitigate risk.

- Where your link comes from, not just what level of apparent “quality” it has. Link trust is now a key metric.

- Increasing the use of brand and “white noise” anchor text to remove obvious exact and phrase match anchor text problems.

- Looking for sites that receive a lot of social sharing relative to your niche and building those relationships.

- Running backlink checks on the sites you get links from to ensure their equity isn’t coming from bad neighborhoods, as that could pass to you.
