We recently caught up with Clark Boyd, a visual search expert and regular contributor to Search Engine Watch. We discussed camera-based visual search – that futuristic technology that allows you to search the physical world with your smartphone – what it means for the way search is changing, and whether we’re going to see it become truly commonplace any time soon.

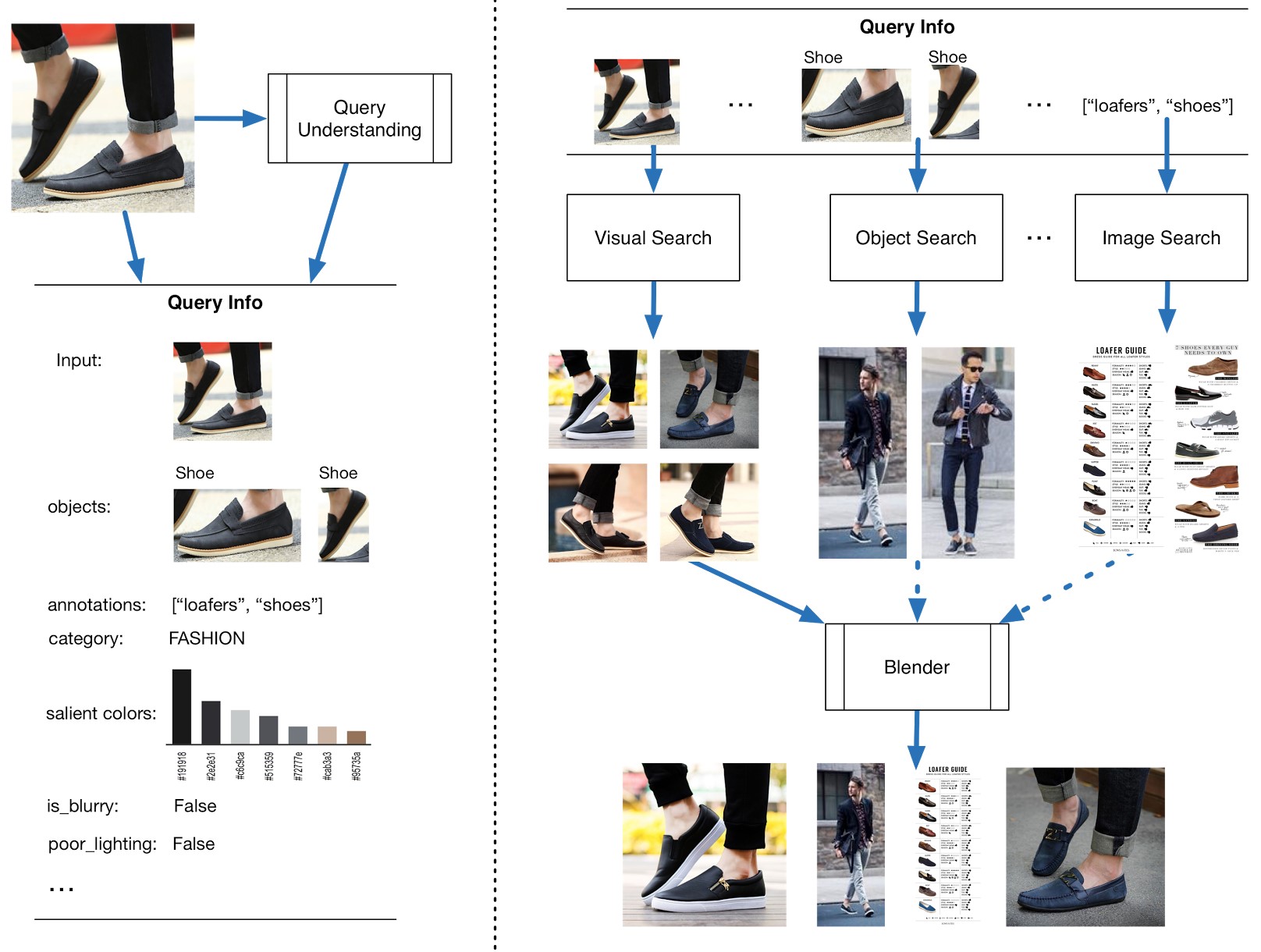

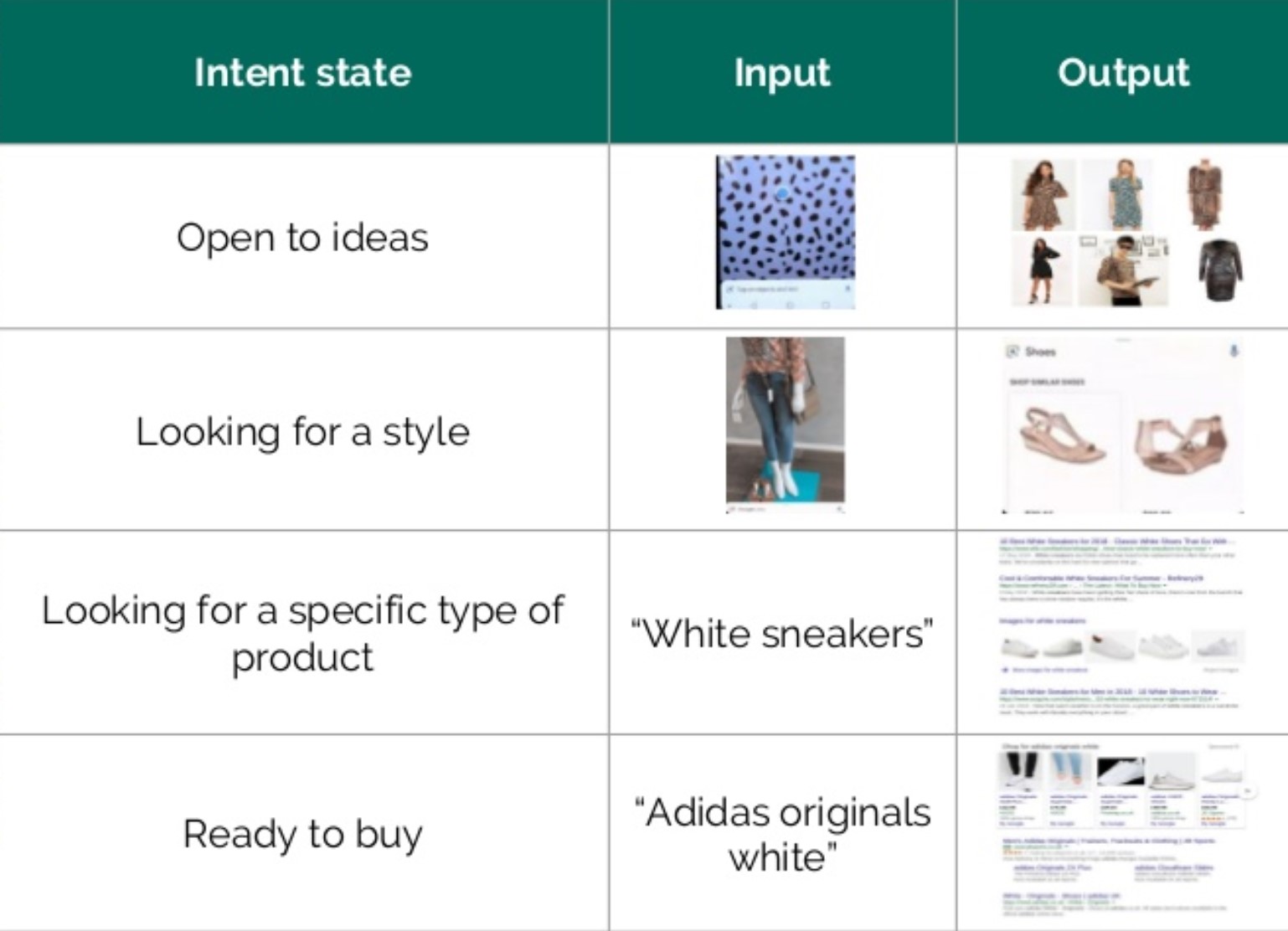

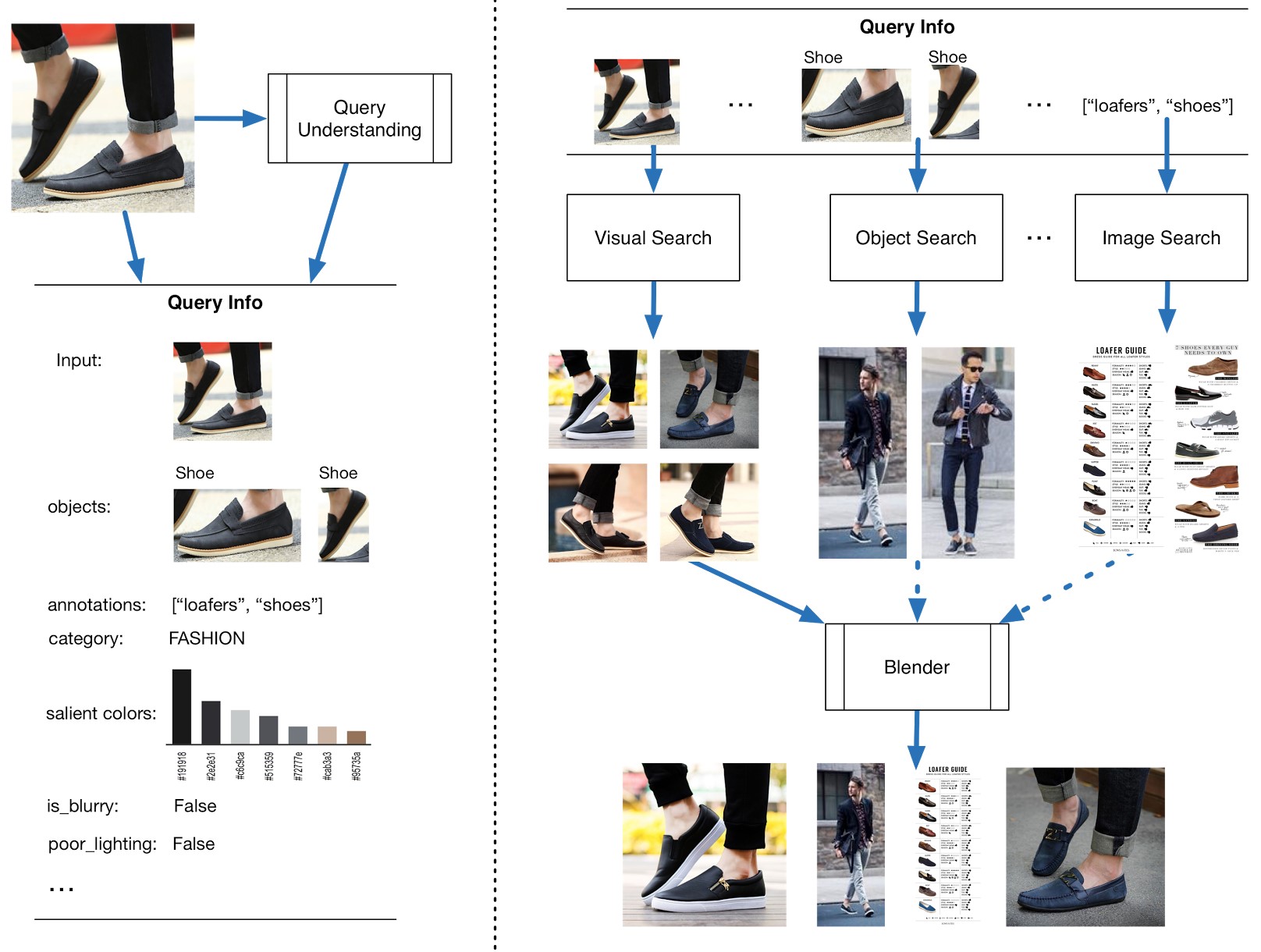

In case any of our readers aren’t up to speed on what ‘camera-based visual search’ actually is, we’re talking about technology like Google Lens and Pinterest Lens; you can point your smartphone camera at an object, the app will recognize it, and then perform a search for you based on what it identifies.

So you can point it at, for example, a pair of red shoes, the technology will recognize that these are red shoes, and it’ll pull up search results – such as shopping listings – for similar-looking pairs of shoes.

In other words, if you’ve ever been out and about and seen someone with a really cool piece of clothing that you wish you could buy for yourself – now you can.

First of all – what’s your personal take on camera-based visual search – the likes of Google Lens and Pinterest Lens? Do you use these technologies often?

I have used visual search on Google, Pinterest, and Amazon quite a lot. For those who haven’t used these yet, you can do so within the Google Lens app (now available on iOS), the Pinterest app, and the Amazon app too.

In essence, I can point my smartphone at an object and the app will interpret it based on what it sees, but also what it assumes I want to know.

With Google, that can mean additional information about landmarks pulled from the Knowledge Graph or it might show me Shopping links. On Pinterest it could show recipes if I look at some ingredients, or it can go deeper to look at the style of a piece of furniture, for example. Amazon is a bit more straightforward in that it will show me similar products.

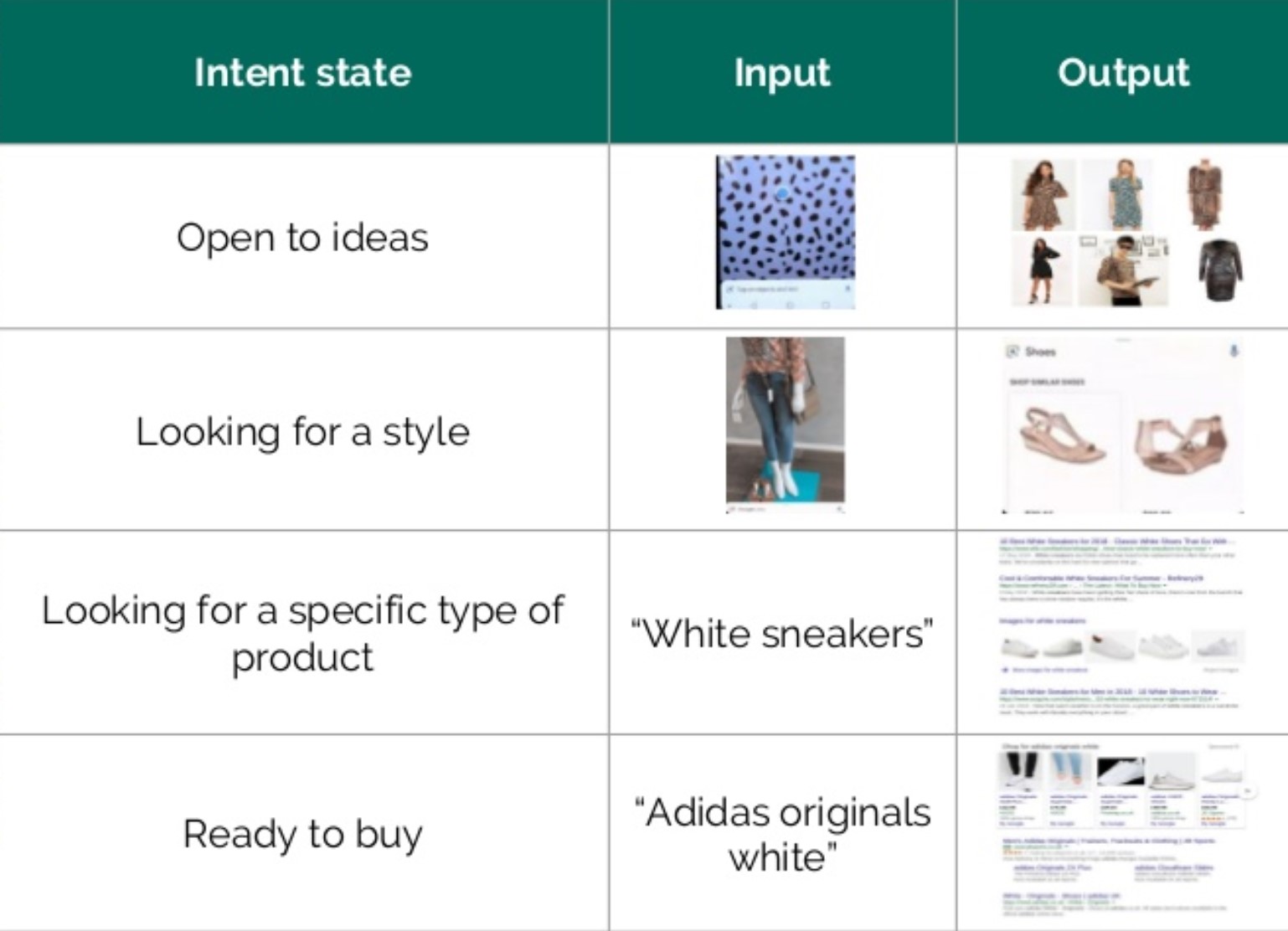

I suppose that visual search is best summarized by saying it’s there when we don’t have the words to describe what we want to know. That could be an item of clothing, or we could be looking for inspiration – we know what the item is, but we aren’t 100% sure what would go with it.

Recently, I have been both decorating a house and planning my wedding. As a colorblind luddite with more enthusiasm than taste, I can use visual search to help me plan. Simply typing a text search for [armchairs] is going to lead me nowhere; scanning a chair I like to find similar items and also complementary ones is genuinely useful for me.

At the moment, this works best on Pinterest. It uses contextual signals (Pins, boards, feedback from similar users) to pick up on the esthetic elements of an object, beyond just shape and color. Design patterns and texture are used to deliver nuanced and satisfactory items in response to a query.

What’s interesting is that camera-based searches on Pinterest deliver different results to text-based searches for similar items. Basically, visual search can often lead to better results on Pinterest. That’s not the case on Google yet, but that’s where they are aiming to get to.

And that’s the key, really: in some contexts, visual search adds value for the user. It’s easy to use and can lead to better results. There are now over 600,000,000 visual searches on Pinterest every month, so it seems people are really starting to engage with the technology.

To my mind, that is what will give visual search longevity. It mimics our thought process and augments it, too; visual search opens up a whole new repository of information for us.

Camera-based visual search has some fairly obvious applications in the realm of ecommerce, for example where you can see something while you’re on the go and instantly pull up search results showing you how to buy it. But do you think there are any other big potential uses for visual search?

I think there are lots of potential uses, yes; in fact, even the ecommerce example really only scratches the surface.

Where visual search comes into its own, and I think goes beyond the realm of the purely novel, is when it suggests new ideas that people have not yet thought of.

Pinterest’s Lens the Look tool is a great example. I could search for shoes and find the pair I wanted, but Pinterest can also suggest an outfit that would go with the shoes too. This then becomes more of an ongoing conversation.

The new app from fashion retailer ASOS will likely go in this direction too, and I expect sites like Zara and H&M to follow suit. IKEA has its AR-tinged effort too, which allows people to see how the furniture will look. Although in my experience, it will lie in a million pieces for days until I figure out how to put it all together!

We should always consider that visual search exists at a very clear intersection of the physical and the digital. As a result, we should also think about the ways in which we can make it easier for people to enhance their experience of our stores through visual search.

We have seen things like QR codes linger without ever really taking off here, and Pinterest has launched Pincodes as a way to try and get people to engage.

Google has started adding features like this to its Lens tool, and the recent announcement about voice-activated Shopping through Google Express is another step in that direction.

The core of this is really to get people on board first and foremost, and then to introduce more overt forms of ecommerce.

Beyond that, visual search can allow us to take better pictures. Google has demonstrated forthcoming versions of Lens that will automatically detect and remove obstructions from images, and connect to Wi-Fi networks just by showing the camera the password.

What we’re really looking for are those intangibles that only an image can get close to capturing. So anything related to style or design, such as the visual arts or even tattoos (the most searched for ‘item’ on Pinterest visual search), will be a natural fit.

Search has been a fantastic medium when we want to locate a product or service. Its text-based input format limits its reach, however. If search is to continue expanding, it must become a more comprehensive resource, actively searching on our behalf before we provide explicit instruction.

We’ve seen a lot of development in the realm of visual search over the past couple of years, with tech companies like Google, Pinterest and Bing emerging as front-runners in the field. Google acquired an image recognition start-up, and Pinterest hired a new Head of Search and started more seriously developing its search capabilities. What do you think could be coming next for visual search?

First of all, the technology will keep improving in accuracy.

Acquisitions will likely be a part of this process. Pinterest’s early success can be put down to personnel and business strategy, but they also bought Kosei in 2015 to help understand and categorize images.

I would expect Google to put a lot of resource into integrating visual search with its other products, like Google Maps and Shopping. The recent I/O developers conference provided some tantalizing glimpses of where this will lead us.

Lens is already built into the Pixel 2 camera, which makes it much easier to access, but it still isn’t integrated with other products in a truly intuitive way. People are impressed when their smartphone can recognize objects, but that capability doesn’t really add long-term value.

So, we will see a more accurate interpretation of images and, therefore, more varied and useful results.

To go back to the example of my attempts to help furnish an apartment, I don’t think where we are today is by any means the fulfillment of visual search’s promise. I can certainly imagine a future where visual search can scan the space in my living room, take the dimensions into account, and act as my virtual interior designer, recommending designs that fit with my preferences and budget. AR technology would let me see how this will look before I buy and also save the image so I can come back to it.

The technologies to do that either exist or are getting to an acceptable level of accuracy. Combined, they could form a virtual interior design suite that either brands or search engines could use.

A gap still remains between the search engine and the content it serves, however.

For this to function, brands need to play their part too. There are plentiful best practices for optimizing for Pinterest search and all visual search engines make use of contextual signals and metadata to understand what they are looking at.

One way this could happen is when brands team up with influencers to showcase their products. As long as their full range is tied thematically to the products on show, these can be served to consumers as options for further ideas.

In summary, I think the technology has a bit of development still to come, but we need to meet the machine learning algorithms halfway by giving them the right data to work with. Pinterest has used over one billion images in its training set, for example. That means taking ownership of all online real estate and identifying opportunities for our content to surface through related results.

The advertising side of this will come, of course (and Pinterest is evolving its product all the time), but for this to come to fruition it also requires a shift in mindset from the advertisers themselves. The most sophisticated search marketers are already looking at ways to move beyond text-based results and start using search as a full-funnel marketing channel.

We’ve been talking about visual search mostly in the context of smartphones, as currently that’s the technology most immediately suited to searching the physical world, given that all smartphones these days have built-in cameras.

But what about other gadgets? We’re seeing a lot of companies at the moment who are developing smart glasses or AR glasses – Snap, Intel, Toshiba came out with a pair just a few months ago – could visual search find a natural home there?

I’m not sure we’ve seen the end of Google Glass, actually. I really don’t think Google is finished in that area and it does make sense to have visual search incorporated directly into our field of vision.

The most likely area to take off here in terms of usage in the short-term is actually for the visually impaired. There are smart glasses that use artificial intelligence (AI) to perform visual searches on objects and highlight immediately what they are seeing.

Those are from a company called Poly, who are doing a range of interesting things in this space.

We think of devices that we wear or actively use, but that may not even be the long-term future of visual search. Poly has also developed visual search technology that works in stores. It can keep track of inventory levels automatically, and it also detects who is in the store by linking with the Bluetooth connection in their phones.

Things like face IDs on smartphones along with Apple/Google Pay really help to create this potential use.

So the visual search exists at a higher level: it detects who is in the store, and it adds items to their basket as they pick them up. When the person leaves, they are charged via Apple/Google Pay or similar. So a bit like the Amazon Go stores, but using visual search to scan the store and see who is there and what they buy.

The cost for doing this has reduced dramatically, so it would now be possible for smaller stores to engage with this technology. Where that has potential to take off is in its introduction of a friction-free shopping experience.

That’s just one potential use, but it highlights how visual search can lead to much bigger opportunities for retailers and customers.

How close we are to that reality depends on people’s proclivity to accept that level of surveillance.

The most futuristic technology in the world is no good if no-one is using it, and we’ve seen much-vaunted tech advancements flop before – speaking of smart glasses, Google Glass is a good example of that. So what would you say are the immediate barriers to the more widespread adoption of visual search? What kind of timeline are we looking at for visual search entering the mainstream – if indeed it ever does?

With voice search, it was always stated that 95% accuracy would be the point at which people would use the technology. I don’t think there have been extensive studies into visual search yet, but that should come soon. With increased accuracy will come widespread awareness of the potential uses of visual search.

The short-term focus really has to be on making the technology as useful as it can be.

Once the technology gets closer to that 95% accuracy mark, the key test will be whether novelty use turns into habit. The fact that over 600,000,000 visual searches take place on Pinterest each month suggests we are quickly reaching that point.

It also has to be easy to access visual search, because the moments in which we want to use it can be quite fleeting.

From there, it will be possible for retailers, search engines, and social media platforms like Instagram and Pinterest to build out their advertising products.

As with any innovation, there is a point of critical mass that needs to be reached, but we are starting to see that with voice search, and the monetization of visual search sits rather more naturally, I think.

We want to understand the world around us and we want to engage with new ideas; images are the best way to do this, but they are also a difficult form of communication.

Our culture is predominantly visual and has been for some time. We need only look at the nature of ads over the past century; text recedes as imagery assumes the foreground in most instances.

Whether the Lens technologies are an end in themselves or just a stage in the development of visual search, we can’t be sure. There may be entirely new technologies that sit outside smartphones in the future, but image recognition will still be central.

I would still encourage all marketers to embrace a trend that only looks likely to gather pace.

Visual search is still quite an abstract concept for most of us, so is there anything practical that marketers and SEOs can do to prepare for it? Is it possible to optimize for visual search just yet? If marketers want to try and keep ahead of the visual search curve, what would be the best way to do that?

Any time we are dealing with search, there will be a lot of theory and practice that can help anyone get better results. We just don’t have the shortcuts we used to.

When it comes to visual search, I would recommend:

- Read blogs like Pinterest Engineering. It can seem as though these things work magically, but there is a clear methodology behind visual search.

- Organize your presence across Instagram, Google, and Pinterest. Visual search engines use these as hints to understand what each image contains.

- Follow the traditional image search best practices.

- Analyze your own results. Look at how your images perform and try new colors, new themes. Results will be ever more personalized, so there isn’t a blanket right or wrong.

- Consider how your shoppable images might surface. You either want to be the item people search for or the logical next step from there. Look at your influencer engagements and those of your competitors to see what tends to show up.

- Engage directly with creative teams. Search remains a data-intensive industry and always will be, but this strength is now merging with the more creative aspects. Search marketers need to be working with social media and brand teams to make the most of visual search.

- Make it easy to isolate and identify items within your pictures. Visual search engines have a really tough job on their hands; don’t make it harder for them.

- Use a consistent theme and, if you use stock imagery, adapt it a bit. Otherwise, the image will be recognized based on the millions of other times it has appeared.

- Think about how to optimize your brick-and-mortar presence. If people use products as the stimulus for a search, what information will they want to know? Price, product information, similar items, and so on. Then ensure that you are optimized for these. Use structured data to make it easy for a search engine to surface this information. In fact, if there’s one thing to focus on for visual search right now, it is structured data.
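
To make the structured data point concrete, here is a minimal sketch of how a product page's markup might be generated. It assumes the schema.org `Product` and `Offer` vocabulary expressed as JSON-LD, which is one common way to publish structured data; the helper names (`product_jsonld`, `to_script_tag`), the example product, and the URL are all hypothetical and not taken from the interview.

```python
import json


def product_jsonld(name, image_url, description, price, currency, availability="InStock"):
    """Build a schema.org Product description as a JSON-LD dictionary."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "image": [image_url],
        "description": description,
        "offers": {
            "@type": "Offer",
            # Price is emitted as a string with two decimal places.
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": f"https://schema.org/{availability}",
        },
    }


def to_script_tag(data):
    """Wrap the JSON-LD in the script tag that would sit in the page's HTML."""
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical product; swap in real catalogue data.
armchair = product_jsonld(
    name="Velvet armchair",
    image_url="https://example.com/images/velvet-armchair.jpg",
    description="Mid-century style armchair in green velvet.",
    price=249.0,
    currency="GBP",
)
print(to_script_tag(armchair))
```

The idea is simply that price, product information, and the image itself are stated explicitly next to each other, so a search engine does not have to infer them from the picture alone. Which properties a given visual search engine actually reads is not something this sketch can confirm.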

Check out Clark’s presentation on visual search here.